What Enterprise API Modernization Means in 2026 (Beyond “SOAP to REST”)

Enterprise API modernization in 2026 is no longer a question of replacing one interface style with another.

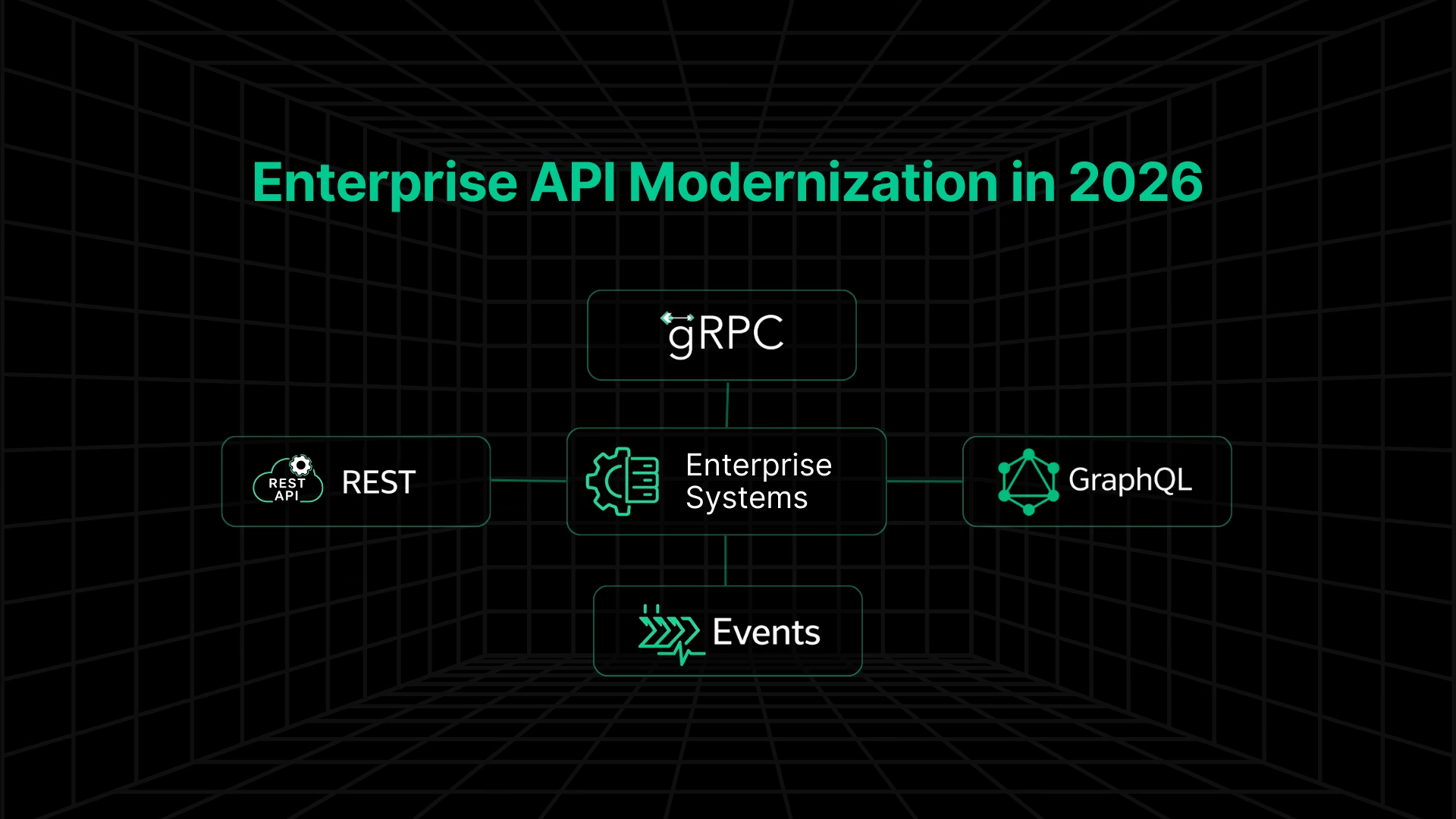

Most large organizations already operate multiple API paradigms in parallel: SOAP services that still run revenue workflows, legacy REST APIs consumed by external partners, newer gRPC services for internal systems, GraphQL layers added for aggregation, and event streams introduced to decouple parts of the estate. Modernization now happens inside this mixed estate, not instead of it.

What has fundamentally changed is tolerance for risk. APIs today have unknown or partially documented consumers, long-lived integrations outside the organization, and contractual expectations that cannot be broken without business impact.

The assumptions that shaped earlier API modernization efforts, such as clean cutovers, controlled consumers, and a limited blast radius, no longer hold. In 2026, even “small” changes propagate across systems, teams, and partners faster than governance models can react.

This is why enterprise API modernization must be treated as a system-level execution problem, not an interface upgrade or protocol decision. Success depends on understanding how APIs behave in production, how consumers rely on them, and where change will ripple before any modernization work begins.

Enterprise API modernization today succeeds or fails on how well teams understand system behavior before they change it, not on the protocol they choose.

Assessing the Current API Landscape Before You Change Anything

Most enterprises underestimate the complexity of their API landscape. Over time, APIs accumulate across stacks and generations, often without a single, reliable view of what exists, who consumes it, or what will break if behavior changes. Modernizing APIs without first addressing this gap is the most common way enterprise programs fail.

A practical API landscape assessment focuses on a small number of concrete dimensions:

1. API inventory across generations

- SOAP/WSDL services embedded in core workflows

- Legacy REST APIs exposed to partners or external clients

- Internal RPC-style interfaces that were never designed as stable contracts

2. Consumer visibility

- Internal consumers (services, batch jobs, UI layers)

- External or partner integrations outside direct team control

- Consumers that exist in production but are no longer actively owned

3. Dependency chains beyond services

- Downstream batch processes and scheduled jobs

- Data pipelines and reporting layers

- Frontend applications relying on undocumented behavior

4. Contract quality and drift

- WSDL or OpenAPI specs that no longer reflect runtime behavior

- Inconsistent or missing versioning

- Undocumented payload extensions and implicit assumptions

Most failed API migrations trace back to blind spots in one or more of these areas. Teams assume documentation is accurate, overlook indirect dependencies, or discover unknown consumers only after changes reach production. By that point, rollback is expensive and timelines are already compromised.
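A first pass at closing these blind spots can be as simple as recording, per API, what the spec declares versus what production traffic shows, and who actually calls it. The sketch below assumes that data has already been collected (for example from gateway logs and spec files); the `Consumer` and `ApiEntry` model, its field names, and the sample data are hypothetical illustrations, not the output format of any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical inventory model: field names are illustrative only.
@dataclass
class Consumer:
    name: str
    external: bool       # partner or client outside direct team control
    owner: str | None    # None = still calling in production, but nobody owns it


@dataclass
class ApiEntry:
    name: str
    style: str                    # e.g. "SOAP", "REST", "RPC"
    documented_fields: set[str]   # fields declared in the WSDL/OpenAPI spec
    observed_fields: set[str]     # fields actually seen in production payloads
    consumers: list[Consumer] = field(default_factory=list)


def assessment_findings(api: ApiEntry) -> dict[str, list[str]]:
    """Flag consumer-visibility and contract-drift blind spots for one API."""
    return {
        "unowned_consumers": sorted(c.name for c in api.consumers if c.owner is None),
        "external_consumers": sorted(c.name for c in api.consumers if c.external),
        # Present at runtime but absent from the spec: undocumented extensions.
        "undocumented_fields": sorted(api.observed_fields - api.documented_fields),
        # Declared in the spec but never observed: possible contract drift.
        "unobserved_spec_fields": sorted(api.documented_fields - api.observed_fields),
    }


# Usage with made-up sample data.
orders = ApiEntry(
    name="OrderService",
    style="SOAP",
    documented_fields={"orderId", "status", "total"},
    observed_fields={"orderId", "status", "total", "legacyRegionCode"},
    consumers=[
        Consumer("billing-batch", external=False, owner="finance-platform"),
        Consumer("partner-acme", external=True, owner="partnerships"),
        Consumer("old-reporting-job", external=False, owner=None),
    ],
)
print(assessment_findings(orders))
```

With this sample data, `old-reporting-job` surfaces as an unowned consumer and `legacyRegionCode` as an undocumented payload extension: exactly the kind of finding that is cheap before a migration and expensive after it.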

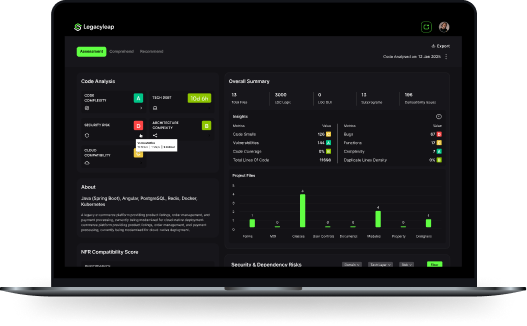

This is why assessment is the first non-negotiable step in safe enterprise API modernization. Interface choices, event strategies, and migration patterns are downstream decisions. It is also why enterprises increasingly rely on platforms like Legacyleap at the assessment stage to build a dependency-aware map of APIs, consumers, and contracts before any modernization decision is made.

Choosing the Right Interface Style: REST, gRPC, GraphQL, or Events

In enterprise environments, interface selection is rarely a clean, greenfield choice. REST, gRPC, GraphQL, and event-driven APIs each solve different problems and introduce different constraints. The mistake teams make is treating this as a stylistic or trend-driven decision, rather than one shaped by consumers, contracts, and operational realities.

A constraint-driven view helps keep these decisions grounded.

Key constraints to look out for

- Consumer types and environments: Browser-based clients, mobile apps, internal services, partners, and batch systems impose very different constraints on protocols, tooling, and backward compatibility.

- Latency and payload sensitivity: High-frequency internal calls, streaming workloads, and coarse-grained partner integrations tolerate latency and payload size very differently.

- Versioning and backward-compatibility tolerance: Some consumers can upgrade quickly; others cannot. This directly affects how often contracts can change and how long multiple versions must coexist.

- Caching, observability, and debugging requirements: The ability to inspect traffic, cache responses, trace failures, and diagnose issues in production varies significantly across interface styles.

Constraint-driven comparison (enterprise lens)

| Constraint / Consideration | REST | gRPC | GraphQL | Events |
|---|---|---|---|---|
| Primary consumer environments | Browsers, partners, external clients | Internal services, low-latency backends | Frontend aggregation layers | Asynchronous consumers, downstream systems |
| Payload and latency profile | Moderate payloads, higher overhead | Compact payloads, low latency | Variable payload size per query | Asynchronous, latency-tolerant |
| Versioning complexity | Medium; requires careful compatibility discipline | High; schema evolution must be tightly controlled | High; schema changes ripple quickly | High; consumers are loosely coupled but long-lived |
| Caching behavior | Well-supported via HTTP semantics | Limited, custom implementations | Non-trivial; query-level complexity | Not cache-oriented |
| Observability and debugging | Strong, mature tooling | More complex, tooling-dependent | Query-level visibility required | Requires event tracing and replay support |
| Typical enterprise usage pattern | External-facing and partner APIs | Internal service-to-service calls | API aggregation, frontend optimization | Decoupling workflows and data propagation |

In practice, most enterprises converge on hybrid interface models: REST for external stability, gRPC for internal efficiency, GraphQL where aggregation is unavoidable, and events to decouple workflows over time.
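The hybrid heuristic can be written down as an explicit starting-point rule so that it is reviewed per consumer rather than applied by habit. This is a minimal sketch assuming four hypothetical yes/no constraints (`ConsumerProfile` and `default_interface` are illustrative names); real decisions also weigh versioning tolerance, observability, and existing contracts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsumerProfile:
    external: bool               # partners, browsers, third-party clients
    needs_immediate_reply: bool  # False = consumer can react asynchronously
    needs_aggregation: bool      # frontend composing several backend resources
    latency_sensitive: bool      # high-frequency service-to-service calls


def default_interface(p: ConsumerProfile) -> str:
    """Starting-point heuristic mirroring the hybrid model; not a hard rule."""
    if not p.needs_immediate_reply:
        return "events"   # decouple workflows; no synchronous contract required
    if p.external:
        return "REST"     # external stability, HTTP caching, mature tooling
    if p.needs_aggregation:
        return "GraphQL"  # only where aggregation is unavoidable
    if p.latency_sensitive:
        return "gRPC"     # compact payloads for internal calls
    return "REST"


internal_pricing = ConsumerProfile(
    external=False, needs_immediate_reply=True,
    needs_aggregation=False, latency_sensitive=True,
)
print(default_interface(internal_pricing))  # gRPC
```

The ordering of the checks is itself a design choice: asynchronous tolerance is tested first, so a partner feed that can consume events is steered away from a new synchronous contract.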

The risk is in mixing styles without a clear understanding of how contracts evolve, how consumers behave, and how changes are rolled out safely.

These choices only hold when contracts, consumers, and rollout mechanics are fully understood. Otherwise, interface selection remains theoretical.

Introducing Event-Driven APIs Without Breaking Existing Systems

Event-driven APIs are often introduced to reduce coupling and improve scalability, but in enterprise environments, they rarely replace existing request/response flows overnight. In practice, events are added alongside APIs that still power live business processes.

The challenge is adopting events without changing system behavior in ways consumers don’t expect.

Most enterprises begin by emitting events from legacy systems rather than re-architecting them. This allows downstream consumers to react asynchronously while existing synchronous APIs continue to serve current integrations.
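One low-risk way to emit events from a legacy system is to wrap the existing synchronous handler rather than rewrite it. The sketch below is illustrative: `with_event_emission`, the event envelope fields, and the `publish` callable (standing in for a real broker client) are all assumptions. It is best-effort only; where delivery guarantees matter, teams typically use a transactional outbox instead.

```python
import json
from typing import Any, Callable

Payload = dict[str, Any]
Handler = Callable[[Payload], Payload]


def with_event_emission(
    legacy_handler: Handler,
    topic: str,
    event_type: str,
    publish: Callable[[str, bytes], None],
) -> Handler:
    """Wrap an existing synchronous handler so it also emits an event.

    The synchronous response is returned unchanged, and the event is only
    published after the legacy call succeeds, so current callers see no
    difference in behavior.
    """

    def wrapped(request: Payload) -> Payload:
        response = legacy_handler(request)  # existing behavior runs first, untouched
        event = {"type": event_type, "schemaVersion": 1, "data": response}
        try:
            publish(topic, json.dumps(event).encode("utf-8"))
        except Exception:
            # Best effort only: a failing async path must not break the sync
            # contract. A production system would log this and retry, or use
            # a transactional outbox for delivery guarantees.
            pass
        return response

    return wrapped


# Usage with an in-memory stand-in for a broker client.
published: list[tuple[str, bytes]] = []


def legacy_create_order(request: Payload) -> Payload:
    return {"orderId": "A-1", "status": "ACCEPTED", "total": request["total"]}


create_order = with_event_emission(
    legacy_create_order, "orders", "OrderAccepted",
    lambda topic, body: published.append((topic, body)),
)
response = create_order({"total": 42})
```

Existing integrations keep calling the same endpoint and get the same response, while new downstream consumers subscribe to `orders` at their own pace.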

For long stretches, request/response and async flows must coexist, sharing data models, business rules, and operational constraints. That coexistence is where most failures occur.

Common failure modes show up quickly:

- Silent consumer breakage: Events introduce new consumers that are loosely coupled and long-lived. When payloads change without visibility into who consumes them, breakage often goes undetected until downstream systems drift.

- Schema evolution mistakes: Event schemas tend to evolve faster than REST contracts, but compatibility rules are often weaker or inconsistently enforced. Small changes such as enum updates, field removals, and semantic shifts can cascade across consumers.

- Duplicate business logic: Without clear boundaries, teams re-implement validation or transformation logic in both synchronous APIs and event handlers, increasing inconsistency and maintenance cost.
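Schema evolution mistakes are easiest to catch mechanically, before a new event version is published. The sketch below assumes a simplified, hypothetical schema format (field name mapped to `type`, `required`, and optional `enum`) rather than any specific registry's format, and checks changes from the point of view of existing consumers reading events produced with the new schema. Semantic shifts cannot be detected this way.

```python
from typing import Any

# Hypothetical simplified schema: field name -> {"type": str, "required": bool, "enum": list | None}
Schema = dict[str, dict[str, Any]]


def breaking_changes_for_consumers(old: Schema, new: Schema) -> list[str]:
    """List changes that can break consumers still coded against `old`
    when they receive events produced with `new`."""
    problems: list[str] = []
    for name, old_field in old.items():
        new_field = new.get(name)
        if new_field is None:
            problems.append(f"field removed: {name}")
            continue
        if new_field["type"] != old_field["type"]:
            problems.append(f"type changed: {name} {old_field['type']} -> {new_field['type']}")
        if old_field.get("required") and not new_field.get("required"):
            problems.append(f"no longer guaranteed present: {name}")
        old_enum, new_enum = old_field.get("enum"), new_field.get("enum")
        if old_enum is not None and new_enum is not None:
            # New values can hit exhaustive switch/match logic in old consumers.
            added = sorted(set(new_enum) - set(old_enum))
            if added:
                problems.append(f"new enum values in {name}: {added}")
    # Newly added optional fields are ignored: old consumers simply skip them.
    return problems


old_schema: Schema = {
    "orderId": {"type": "string", "required": True},
    "status": {"type": "string", "required": True, "enum": ["NEW", "PAID"]},
    "total": {"type": "number", "required": True},
}
new_schema: Schema = {
    "orderId": {"type": "string", "required": True},
    "status": {"type": "string", "required": True, "enum": ["NEW", "PAID", "REFUNDED"]},
    "total": {"type": "string", "required": True},
    "currency": {"type": "string", "required": False},
}
print(breaking_changes_for_consumers(old_schema, new_schema))
```

Here the added `currency` field passes, while the new `REFUNDED` enum value and the `total` type change are both flagged as consumer-facing risks.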

This is why event-driven API modernization attracts so much interest and carries so much risk. Most guidance focuses on infrastructure, such as Kafka or RabbitMQ adoption, throughput, and tooling, while underplaying sequencing, compatibility, and operational impact.

In reality, successful event introduction depends on understanding existing data flow, side effects, and consumer behavior before any new async path is exposed. This is where system-aware modernization approaches outperform script-based or rewrite-heavy efforts: event introduction is treated as a controlled extension of existing behavior, not a parallel system built in isolation.

Migration Execution Patterns That Reduce Risk (Strangler, Façade, Parallel Run)

By the time enterprises reach execution, the patterns are well known. What separates successful API migrations from stalled ones is how these patterns are applied under real system constraints, not whether teams are aware of them.

Most enterprise API migrations rely on a combination of three execution mechanics:

- Strangler patterns at the gateway or routing layer: New implementations are introduced incrementally while traffic is selectively routed away from legacy endpoints. This limits blast radius, but only works when routing decisions are grounded in an accurate view of consumers and dependencies.

- Façade patterns to shield consumers: A stable external contract is preserved while internal implementations evolve behind it. Façades reduce consumer churn, but they fail quickly when hidden assumptions about payloads or behavior aren’t surfaced early.

- Parallel run strategies with defined cutover checkpoints: Old and new implementations run side by side while outputs, behavior, and performance are compared. Without clear validation criteria and rollback points, parallel runs become expensive and inconclusive.
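The strangler routing decision is usually expressed in gateway configuration, but its logic is small enough to sketch. The function below is hypothetical and assumes each request carries a stable consumer ID (for example from its credentials). It pins unverified consumers to legacy, sends explicitly validated consumers to the new implementation, and moves everyone else by a deterministic percentage so a given caller never flips between implementations on successive requests.

```python
import hashlib


def route(
    consumer_id: str,
    migrated: set[str],
    pinned_legacy: set[str],
    rollout_percent: int,
) -> str:
    """Decide which implementation serves a request (illustrative sketch)."""
    if consumer_id in pinned_legacy:
        return "legacy"  # e.g. partners whose reliance on old behavior is not yet verified
    if consumer_id in migrated:
        return "modern"  # consumers explicitly validated against the new implementation
    # Stable 0-99 bucket per consumer so the same caller always lands on the same side.
    bucket = int(hashlib.sha256(consumer_id.encode("utf-8")).hexdigest(), 16) % 100
    return "modern" if bucket < rollout_percent else "legacy"


print(route("billing-batch", migrated={"billing-batch"}, pinned_legacy=set(), rollout_percent=0))
```

Keeping the pinned and migrated sets explicit means routing reflects what is actually known about each consumer, which is the precondition the strangler pattern depends on.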

These patterns break down for the same reason: lack of contract and dependency clarity. Teams underestimate which consumers rely on which behaviors, overtrust outdated specifications, or discover late that a “minor” change affects downstream systems. At that point, execution patterns lose their protective value and become operational overhead.

This is where platforms like Legacyleap materially change outcomes by grounding migration patterns in verified dependencies, real contract behavior, and a measurable blast radius rather than assumptions. Execution shifts from pattern-driven experimentation to controlled change, where risk is understood before traffic is moved.

For enterprise API modernization, execution patterns are not checklists to follow; they are risk controls. Used without system-level understanding, they provide false confidence. Used with it, they make incremental modernization viable at scale.

Contracts, Compatibility, and Validation During API Modernization

This is the phase where enterprise API modernization either becomes predictable or unstable. Most overruns and rollbacks don’t come from architecture choices; they come from breaking contracts teams assumed were safe.

At scale, contracts are not documentation artifacts. They are operating agreements between systems that evolve at different speeds.

Enterprise-grade compatibility requires the following.

Versioning discipline, not version sprawl

- Backward-compatible changes as the default, not the exception

- Clear rules on what is contractual vs incidental behavior

- Explicit deprecation paths instead of silent behavior changes

Schema evolution that respects consumers

- REST schemas that evolve additively without semantic drift

- Protobuf and gRPC schemas that follow strict wire-compatibility rules

- Event schemas treated as long-lived contracts, not internal payloads

In practice, most teams underestimate how slowly consumers upgrade and overestimate how well those rules are enforced.
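Strict wire-compatibility rules for Protobuf can be enforced in CI instead of by convention. The sketch below models a message as a plain mapping of field number to name and type (a hypothetical simplification; real tooling would read `.proto` files or descriptors) and flags the most common mistakes: changing a field's type, deleting a field without reserving its number, and reusing a reserved number.

```python
# Simplified view of a Protobuf message: field number -> (field name, field type).
Message = dict[int, tuple[str, str]]


def wire_compat_violations(old: Message, new: Message, reserved: set[int]) -> list[str]:
    """Flag common wire-compatibility mistakes between two message versions.

    Conservative: any type change is reported, even though Protobuf tolerates
    a few (for example between some integer types). Renames with the same
    number and type are not flagged because the binary encoding ignores names.
    """
    violations: list[str] = []
    for number, (name, old_type) in sorted(old.items()):
        if number in new:
            _, new_type = new[number]
            if new_type != old_type:
                violations.append(f"field {number} ({name}): type {old_type} -> {new_type}")
        elif number not in reserved:
            violations.append(f"field {number} ({name}): removed but number not reserved")
    for number in sorted(new):
        if number in reserved:
            violations.append(f"field {number}: reuses a reserved number")
    return violations


v1: Message = {1: ("order_id", "string"), 2: ("status", "string"), 3: ("legacy_code", "int32")}
v2: Message = {1: ("order_id", "string"), 2: ("status", "int32"), 4: ("currency", "string")}
for problem in wire_compat_violations(v1, v2, reserved=set()):
    print(problem)
```

Reserving field 3 clears the removal finding, but the `status` type change still has to be reverted or shipped as a new field number.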

Why traditional testing breaks down here

Unit and integration tests answer one question: Does the service work on its own?

They do not answer the question modernization actually depends on: Will existing consumers continue to behave correctly after this change?

That gap becomes critical during parallel runs, when legacy and modernized implementations coexist, and subtle differences creep in. Manual validation doesn’t scale, and spot checks miss edge cases that only appear under real traffic.
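During a parallel run, the core mechanic is comparing what the legacy and modernized paths return for the same request. The recursive diff below is a minimal sketch for JSON-like payloads; the `ignore` set for fields expected to differ (timestamps, trace IDs) and the path notation are illustrative choices, not a specific tool's format.

```python
from typing import Any


def payload_diff(legacy: Any, modern: Any, path: str = "$",
                 ignore: frozenset[str] = frozenset()) -> list[str]:
    """Recursively compare two JSON-like payloads and report differences by path.

    `ignore` holds paths expected to differ (timestamps, trace IDs) so they do
    not drown out real behavioral drift.
    """
    if path in ignore:
        return []
    if isinstance(legacy, dict) and isinstance(modern, dict):
        diffs: list[str] = []
        for key in sorted(set(legacy) | set(modern)):
            child = f"{path}.{key}"
            if child in ignore:
                continue
            if key not in modern:
                diffs.append(f"{child}: missing in modern")
            elif key not in legacy:
                diffs.append(f"{child}: added in modern")
            else:
                diffs.extend(payload_diff(legacy[key], modern[key], child, ignore))
        return diffs
    if isinstance(legacy, list) and isinstance(modern, list):
        if len(legacy) != len(modern):
            return [f"{path}: length {len(legacy)} != {len(modern)}"]
        diffs = []
        for i, (a, b) in enumerate(zip(legacy, modern)):
            diffs.extend(payload_diff(a, b, f"{path}[{i}]", ignore))
        return diffs
    # Leaf values: compare type as well as value so 42 vs "42" or 1 vs True is caught.
    if type(legacy) is not type(modern) or legacy != modern:
        return [f"{path}: {legacy!r} != {modern!r}"]
    return []


legacy = {"orderId": "A-1", "total": 42, "items": [{"sku": "X", "qty": 1}], "generatedAt": "t1"}
modern = {"orderId": "A-1", "total": "42", "items": [{"sku": "X", "qty": 1}],
          "generatedAt": "t2", "currency": "EUR"}
diffs = payload_diff(legacy, modern, ignore=frozenset({"$.generatedAt"}))
print(diffs)
```

The `total` change from a number to a string is exactly the kind of subtle difference a spot check misses; tracking the share of requests with non-empty diffs gives a concrete cutover checkpoint.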

This is why enterprise programs lean on validation mechanisms designed to catch the impact of change, not just to confirm isolated correctness:

- Consumer-driven contract testing to verify expectations from the consumer’s point of view

- Payload diffs between legacy and modernized paths to detect behavioral drift

- Runtime monitoring during cutover to catch breakage before traffic is fully shifted
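The idea behind consumer-driven contract testing is that each consumer records only the fields and types it actually relies on, and the provider is verified against all of those expectations before release. Tools such as Pact implement this with recorded interactions; the sketch below shows only the core check, using a hypothetical flat contract format (field name mapped to Python type) and sample data.

```python
from typing import Any

# A consumer's recorded expectations: the fields it reads and the type it relies on.
ConsumerContract = dict[str, type]


def verify_contract(contract: ConsumerContract, response: dict[str, Any]) -> list[str]:
    """Check one provider response against one consumer's expectations."""
    failures: list[str] = []
    for name, expected in contract.items():
        if name not in response:
            failures.append(f"missing field '{name}'")
        elif not isinstance(response[name], expected):
            actual = type(response[name]).__name__
            failures.append(f"field '{name}': expected {expected.__name__}, got {actual}")
    return failures


def verify_all(contracts: dict[str, ConsumerContract],
               response: dict[str, Any]) -> dict[str, list[str]]:
    """Run every consumer's contract; return only the consumers that would break."""
    broken: dict[str, list[str]] = {}
    for consumer, contract in contracts.items():
        failures = verify_contract(contract, response)
        if failures:
            broken[consumer] = failures
    return broken


contracts = {
    "billing-batch": {"orderId": str, "total": float},
    "partner-acme": {"orderId": str, "status": str},
}
# Candidate response from the modernized implementation (sample data).
candidate = {"orderId": "A-1", "status": "PAID", "total": "42.00"}
print(verify_all(contracts, candidate))
```

Extra fields in the response are deliberately ignored: only behavior a consumer depends on can break it, which is why the result names `billing-batch` specifically rather than failing the release generically.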

Where Legacyleap changes the equation

This is where Legacyleap becomes central to execution as a compatibility control layer.

Legacyleap combines:

- System-level code comprehension to understand what the system actually does

- Automated contract validation and test generation grounded in real behavior

- Continuous verification during parallel runs, not after the fact

The result is less manual verification, fewer late surprises, and earlier detection of breaking changes before they reach production traffic.

For enterprise leaders, this phase isn’t about test coverage. It’s about confidence. When contracts are explicit, compatibility rules are enforced, and validation is continuous, API modernization becomes a controlled process.

Without that, no matter how carefully the architecture was chosen, every release is a gamble.

Security, Governance, and Cost Controls

In enterprise settings, API modernization rarely fails on technical feasibility. It slows down when leadership is unsure whether security exposure, governance gaps, or operating costs will increase after the change. That hesitation usually comes from treating these concerns as post-migration work instead of execution constraints.

Modernization surfaces governance issues that already exist. As APIs are routed through shared gateways and standardized contracts, inconsistencies become visible, such as:

- OAuth2/OIDC scopes that no longer match real access patterns,

- Uneven application of mTLS and zero-trust controls, and

- Limited insight into who is calling which APIs and at what volume.
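A simple way to surface scope drift and call volume is to compare the scopes each client was granted with the scopes it actually exercises. The sketch assumes access records have already been reduced to hypothetical `(client_id, scope)` pairs, for example from gateway logs; the function and field names are illustrative.

```python
from collections import defaultdict
from typing import Iterable


def scope_findings(
    granted: dict[str, set[str]],
    access_log: Iterable[tuple[str, str]],
) -> dict[str, dict[str, object]]:
    """Compare granted OAuth2 scopes with scopes actually exercised per client."""
    used: defaultdict[str, set[str]] = defaultdict(set)
    calls: defaultdict[str, int] = defaultdict(int)
    for client_id, scope in access_log:
        used[client_id].add(scope)
        calls[client_id] += 1
    findings: dict[str, dict[str, object]] = {}
    for client_id in sorted(set(granted) | set(used)):
        g = granted.get(client_id, set())
        u = used.get(client_id, set())
        findings[client_id] = {
            # Granted but never used: candidates for least-privilege cleanup.
            "unused_scopes": sorted(g - u),
            # Used but absent from the grant registry: the registry no longer
            # reflects reality, or enforcement is missing somewhere.
            "unrecorded_usage": sorted(u - g),
            "calls": calls.get(client_id, 0),
        }
    return findings


granted = {"partner-acme": {"orders:read", "orders:write"}, "billing-batch": {"orders:read"}}
log = [
    ("partner-acme", "orders:read"),
    ("partner-acme", "orders:read"),
    ("billing-batch", "orders:read"),
    ("billing-batch", "invoices:read"),
    ("unknown-client", "orders:read"),
]
for client, result in scope_findings(granted, log).items():
    print(client, result)
```

An unused `orders:write` grant and an `unknown-client` with no recorded grant are the kinds of inconsistencies that become visible once traffic flows through a shared gateway.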

The same pattern shows up with performance and cost.

- New interaction models change consumption behavior in ways functional testing doesn’t reveal.

- Caching strategies need to align with versioning and backward compatibility, not just response times.

- GraphQL introduces query-level cost exposure that must be controlled explicitly.

- Rate limits and throttling only work when they reflect real usage, not static assumptions carried forward from legacy systems.
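GraphQL query cost can be controlled by scoring each query before execution and rejecting it above a budget. The sketch below uses one common heuristic (each field costs 1, and a list field multiplies its children's cost by its page size) over a hypothetical, already-parsed `Selection` tree; a real server would parse the query document with a GraphQL library and tune the weights and budgets to observed usage.

```python
from dataclasses import dataclass, field


@dataclass
class Selection:
    name: str
    list_size: int = 1  # e.g. the `first:` argument on a paginated field
    children: list["Selection"] = field(default_factory=list)


def query_cost(sel: Selection) -> int:
    """Each field costs 1; a list field multiplies its children's cost."""
    return 1 + sel.list_size * sum(query_cost(c) for c in sel.children)


def depth(sel: Selection) -> int:
    return 1 + max((depth(c) for c in sel.children), default=0)


def admit(root_fields: list[Selection], max_cost: int, max_depth: int) -> tuple[bool, int]:
    """Return (allowed, cost) so rejected queries can report their score."""
    cost = sum(query_cost(s) for s in root_fields)
    deepest = max((depth(s) for s in root_fields), default=0)
    return cost <= max_cost and deepest <= max_depth, cost


# customers(first: 50) { name orders(first: 20) { id total } }
customers = Selection("customers", list_size=50, children=[
    Selection("name"),
    Selection("orders", list_size=20, children=[Selection("id"), Selection("total")]),
])
print(admit([customers], max_cost=1000, max_depth=5))
```

A query that looks small as text scores 2,101 here because of the nested pagination, which is why cost limits need to be explicit rather than inferred from request counts alone.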

When these controls are added after migration, teams are forced to revisit interfaces and rollout decisions they believed were complete. When they are enforced during execution, they act as guardrails by shaping cutover timing, limiting blast radius, and reducing rework.

This is why enterprises favor modernization approaches that embed security, governance, and cost controls into execution itself, rather than retrofitting them later. Platforms like Legacyleap reinforce this by treating enforcement and visibility as part of the modernization flow, not as a separate cleanup phase. The result is lower audit risk, predictable operating costs, and fewer surprises after go-live.

How Legacyleap Executes Enterprise API Modernization

Most API modernization efforts fail in the same places: incomplete system visibility, assumptions about contracts, and manual validation that doesn't scale. Legacyleap is built specifically to intervene at those failure points, offering a different execution model.

At a high level, Legacyleap changes API modernization in three concrete ways.

- First, it grounds decisions in system-level understanding, not documentation. Instead of relying on WSDLs, OpenAPI specs, or tribal knowledge, Legacyleap analyzes the actual code paths, schemas, and integrations that define how APIs behave in production. This produces a dependency-aware view of APIs, consumers, and side effects, so interface changes are evaluated against reality, not intent.

- Second, it treats contracts as executable constraints, not static artifacts. During modernization, Legacyleap continuously verifies REST, Protobuf, and event schemas against real consumer behavior. Backward-compatibility rules are enforced automatically, payload drift is detected during parallel runs, and breaking changes surface early before traffic is shifted or deprecations are announced.

- Third, it turns validation into a first-class execution step. Instead of relying on manual audits or post-hoc testing, Legacyleap generates and runs contract-level validations as modernization progresses. This makes blast radius measurable, cutovers auditable, and rollback decisions data-driven rather than instinctive.

The result is a modernization process where progress is provable at every stage. Interface choices are informed by constraints, execution patterns are grounded in verified dependencies, and compatibility is validated continuously through a combination of generative AI and subject-matter experts (SMEs).

This is how Legacyleap enables safer, faster, and more predictable enterprise API modernization at scale, especially in environments where failure is not an option.

Closing: Execution Discipline Is What Determines Outcomes

In 2026, enterprise API modernization is no longer a design debate. REST, gRPC, GraphQL, and events are all proven patterns.

What separates successful programs from stalled ones is the ability to understand system behavior upfront, control change during rollout, and verify compatibility as systems evolve. Or, as we like to call it, execution discipline.

When modernization is treated as an execution problem, risk becomes measurable. Dependencies are visible, contracts are enforced, and cutovers are planned rather than improvised. When it isn’t, interface choices offer false confidence while breakage, rework, and delays surface later when they are most expensive to fix.

Platforms like Legacyleap exist to make enterprise API modernization measurable, verifiable, and repeatable, especially in environments where APIs sit at the center of revenue flows, partner integrations, and regulated systems.

If you’re planning API modernization across legacy REST, SOAP, gRPC, GraphQL, or event-driven systems, the next step isn’t choosing another framework. It’s validating whether your execution plan will hold under real-world constraints.

Book a demo to see how Legacyleap supports API modernization at system scale, or start with a $0 API modernization assessment to understand dependencies, compatibility risks, and realistic execution paths before changes reach production.

FAQs

A multi-protocol API strategy starts with consumer and dependency realities, not protocol preference. Most enterprises end up with REST for external stability, gRPC for internal efficiency, GraphQL for aggregation, and events for decoupling workflows. The strategy only works when contracts, versioning rules, and rollout mechanics are defined consistently across protocols. Otherwise, complexity compounds and risk grows instead of shrinking.

Governance should be protocol-agnostic and enforced at execution points, not defined separately for REST, gRPC, or GraphQL. This includes consistent authentication models, explicit versioning and deprecation rules, contract ownership, rate limits, and audit visibility. Effective governance emerges during modernization, when APIs are routed through shared gateways and execution paths become visible.

When third-party or partner systems are involved, backward compatibility becomes non-negotiable. Modernization typically relies on façade patterns, parallel runs, and extended deprecation timelines to avoid forcing external upgrades. Success depends on understanding which behaviors partners rely on and validating changes continuously before shifting traffic.

Yes, but only when modernization is executed incrementally. Parallel runs, strangler patterns, and contract-level validation allow teams to modernize APIs while feature work continues. Without these controls, modernization competes directly with delivery, creating pressure to cut corners and increasing breakage risk.

ROI is rarely justified through performance gains alone. Enterprises make the case through risk reduction: fewer outages, faster onboarding of consumers, lower cost of change, and predictable delivery timelines. Modernization programs that make dependencies, compatibility risk, and rollout impact measurable are far easier to justify at the executive level.