Intro: Agentic AI in Application Modernization

Most discussions around agentic AI focus on capability. In application modernization, capability is not the constraint. Execution is. Modernization programs stall because the work does not scale cleanly across complex, interdependent codebases.

Agentic AI in application modernization changes that equation by shifting responsibility from assistance to execution. Agents take on bounded decision-making, operate directly on legacy systems, and carry work through planning, transformation, and validation under explicit controls. Modernization stops behaving like a sequence of heroic engineering efforts and starts behaving like a system.

This is not a theoretical construct. When agentic systems are built with deep system context, deterministic tooling, and governance by design, they can take ownership of real modernization workflows without eroding product integrity or architectural intent.

The sections that follow describe how agentic AI actually works in application modernization, drawing on its current implementation in production environments, where real-world outcomes are the guiding measure.

What “Agentic” Means in Modernization

In modernization, agentic has a specific, operational meaning: agents do not just suggest changes; they take responsibility for executing bounded decisions under governance. This is the point where AI stops being an assistant and starts behaving like an execution system inside real codebases.

A copilot proposes options based on prompts, but an agent operates against structural and historical system context, plans the work, applies changes, validates outcomes, and escalates only when constraints are violated. The assumption that AI can only assist and never decide breaks down once autonomy is bounded, observable, and enforced through deterministic tooling.

The distinction shows up clearly in practice:

| Dimension | Copilot | Agentic system |
|---|---|---|
| Role | Assistant to an engineer | Autonomous collaborator accountable for scoped outcomes |
| Context | Prompt-level or file-level awareness | System-level context built from structural and historical models, combined with tool-driven analysis |
| Action | Suggests code or guidance | Executes changes through compilers, analyzers, and validation loops |
| Outcome | Human-owned execution and risk | Governed execution with traceability, validation, and defined escalation points |

This definition matters because it sets the ceiling for what modernization systems can safely do. Once agents operate with deep context, deterministic tools, and explicit boundaries, execution becomes predictable rather than probabilistic, and scale stops being a human bottleneck.

The Moment “Agentic AI” Stopped Being “AI Assistance”

There was a clear inflection point in how we approached modernization at Legacyleap. The system stopped behaving like an assistant the moment autonomous agents were connected to a meta-cognitive model that mapped code units, dependencies, data flows, and architectural relationships across the application.

For context, a meta-cognitive model is a rich semantic code context graph that grounds domain- and technology-specific agents, enabling deterministic, incremental Gen AI-driven modernization.

Before that, outputs were locally correct but globally fragile. After the connection, agents could reason about trade-offs, explain why a change was required, and trace its impact across features and services. Execution shifted from reactive suggestions to deliberate actions grounded in system-level context.

That change altered how modernization progressed. Work was sequenced based on dependency pressure rather than file order. Validation became continuous, anchored to reconstructed functional intent. Gaps surfaced earlier, and the system could explicitly signal where confidence was high and where human judgment was required.

This was the point where modernization stopped depending on individual expertise and started depending on the system itself. From there, agentic execution became inevitable because reasoning, memory, and tooling were operating as a single, governed layer rather than disconnected capabilities.
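The difference between locally correct and globally aware changes can be illustrated with a toy dependency graph. The sketch below is a minimal illustration, not Legacyleap's meta-cognitive model: it assumes code units are plain string names and records only "depends on" edges, whereas the model described here also captures data flows and architectural relationships. `CodeContextGraph` and `impact_of` are hypothetical names. Tracing the impact of a change is a reverse-edge traversal: every unit that directly or transitively depends on the changed unit is affected.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CodeContextGraph:
    """Toy stand-in for a code context graph (hypothetical, illustration only)."""

    # Maps each code unit to the set of units it depends on.
    deps: dict[str, set[str]] = field(default_factory=dict)

    def add_dependency(self, unit: str, depends_on: str) -> None:
        self.deps.setdefault(unit, set()).add(depends_on)
        self.deps.setdefault(depends_on, set())

    def dependents_of(self, unit: str) -> set[str]:
        """Units that directly depend on `unit`."""
        return {u for u, targets in self.deps.items() if unit in targets}

    def impact_of(self, changed: str) -> set[str]:
        """Units transitively affected by a change (breadth-first search over reverse edges)."""
        affected: set[str] = set()
        queue = deque([changed])
        while queue:
            for dependent in self.dependents_of(queue.popleft()):
                if dependent not in affected:
                    affected.add(dependent)
                    queue.append(dependent)
        return affected


graph = CodeContextGraph()
graph.add_dependency("OrderForm", "OrderService")
graph.add_dependency("OrderService", "CustomerRepo")
graph.add_dependency("InvoiceReport", "CustomerRepo")
print(sorted(graph.impact_of("CustomerRepo")))
# ['InvoiceReport', 'OrderForm', 'OrderService']
```

Looking only at the direct callers of `CustomerRepo` would miss `OrderForm`, which is affected indirectly through `OrderService`.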

The Agentic Execution Model We Use at Legacyleap

Agentic execution works because modernization is decomposed into distinct responsibilities, not collapsed into a single “AI step.” The system operates as a coordinated set of agents, each accountable for a specific phase of the modernization lifecycle, sharing a common system model and operating under governance.

At a high level, execution is carried out by five agent roles:

- Assessment: establish system understanding

- Documentation: reconstruct functional and technical intent

- Recommendation: decide what to change, in what order

- Modernization: apply and iterate on code changes

- Validation & QA: enforce behavioral and architectural parity

These are not sequential scripts. They operate as collaborating roles against the same context, similar to how experienced teams divide work without fragmenting ownership.

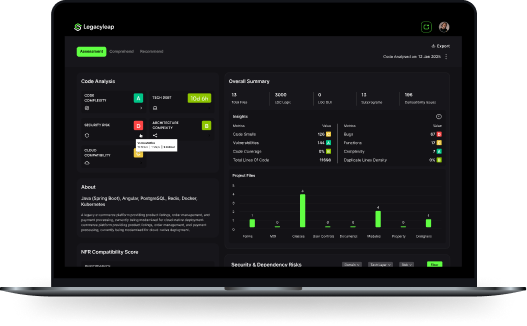

1) Build system context

(Assessment + Documentation)

Execution starts by grounding the system in reality.

- The Assessment role analyzes code structure, dependencies, data flows, and architectural patterns across repositories.

- A shared meta-cognitive model (MCM) is built to represent how the system behaves, not how it is described.

- The Documentation role reconstructs functional and technical specifications directly from this model, producing explainable documentation tied back to source evidence.

This step converts legacy complexity into a reusable system asset rather than a one-time discovery exercise.

2) Turn legacy into executable intent

(Documentation + Recommendation)

Modernization requires intent that can be enforced.

- Functional and technical specifications are translated into feature-level executable intent: user stories, tasks, constraints, and assumptions.

- The Recommendation role reasons over system dependencies and impact to determine what should be modernized, in what order, and under which constraints.

- Decisions are driven by system pressure and architectural fit, not ticket sequencing.

At this point, modernization has a verifiable target state, not just translated code.
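As a simplified illustration of sequencing by dependency pressure rather than file order, the sketch below groups code units into waves so that each unit is scheduled only after everything it depends on. This dependency-first rule is an assumption about one reasonable ordering, not a description of how the Recommendation role decides; that role also weighs impact and architectural fit, which the sketch ignores. `modernization_waves` is a hypothetical helper built on Python's standard `graphlib.TopologicalSorter`.

```python
from graphlib import TopologicalSorter


def modernization_waves(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group units into waves; every unit's dependencies land in earlier waves.

    Raises graphlib.CycleError if the dependencies are circular, which would
    need to be broken or escalated before sequencing can proceed.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical units: each key depends on the units in its set.
depends_on = {
    "OrderForm": {"OrderService"},
    "OrderService": {"CustomerRepo"},
    "InvoiceReport": {"CustomerRepo"},
}
print(modernization_waves(depends_on))
# [['CustomerRepo'], ['InvoiceReport', 'OrderService'], ['OrderForm']]
```

Units in the same wave do not depend on each other, so in principle they could be modernized and validated in parallel.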

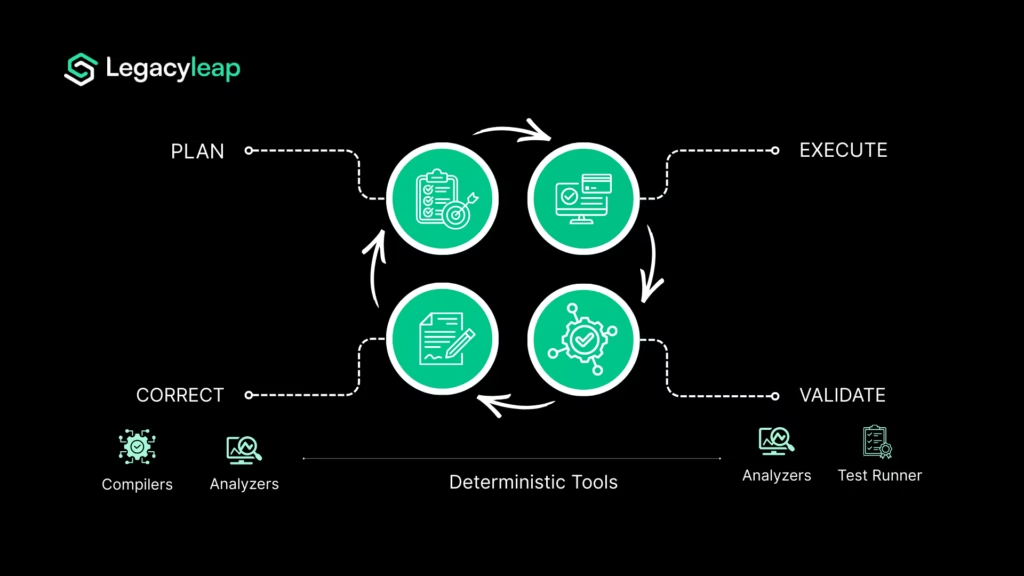

3) Execute changes with validation loops

(Modernization + Validation)

This is where agentic execution replaces manual orchestration.

- The Modernization role applies changes using a Plan → Execute → Validate → Self-correct loop.

- Deterministic MCP tools (compilers, analyzers, test harnesses) act as enforcement points rather than advisory signals.

- The Validation role continuously generates and runs unit, integration, and regression tests to confirm behavioral parity and surface drift early.

Gaps are identified, corrected, and revalidated until constraints are satisfied or escalation is required.
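The Plan → Execute → Validate → Self-correct loop can be sketched as a bounded retry loop in which deterministic validation, not the generator, decides when the work is done. This is a minimal sketch under stated assumptions: `plan`, `execute`, and `validate` are hypothetical callables standing in for the agent's planner, a change applier, and tool-based checks (build, analyzers, tests), and the fixed iteration budget is an illustrative escalation rule.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopResult:
    status: str  # "satisfied" or "escalated"
    iterations: int
    open_issues: list[str] = field(default_factory=list)


def run_change_loop(
    plan: Callable[[list[str]], str],
    execute: Callable[[str], None],
    validate: Callable[[], list[str]],
    max_iterations: int = 5,
) -> LoopResult:
    """Plan -> Execute -> Validate -> Self-correct until checks pass or the budget runs out."""
    issues: list[str] = []
    for iteration in range(1, max_iterations + 1):
        change = plan(issues)  # first pass plans from scratch; later passes target open issues
        execute(change)        # apply the planned change to a working copy
        issues = validate()    # deterministic checks: build, analyzers, tests
        if not issues:
            return LoopResult("satisfied", iteration)
    # Constraints still unmet: escalate with evidence instead of guessing.
    return LoopResult("escalated", max_iterations, issues)


# Hypothetical stand-ins: each "fix" resolves the first open issue.
remaining = ["build: missing type Foo", "test: order total mismatch"]


def plan(issues: list[str]) -> str:
    return f"fix {issues[0]}" if issues else "initial translation"


def execute(change: str) -> None:
    if change.startswith("fix "):
        remaining.remove(change[len("fix "):])


def validate() -> list[str]:
    return list(remaining)


print(run_change_loop(plan, execute, validate))
# LoopResult(status='satisfied', iterations=3, open_issues=[])
```

Returning the unresolved issues on escalation, rather than a best guess, is what makes the hand-off to a human reviewer concrete.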

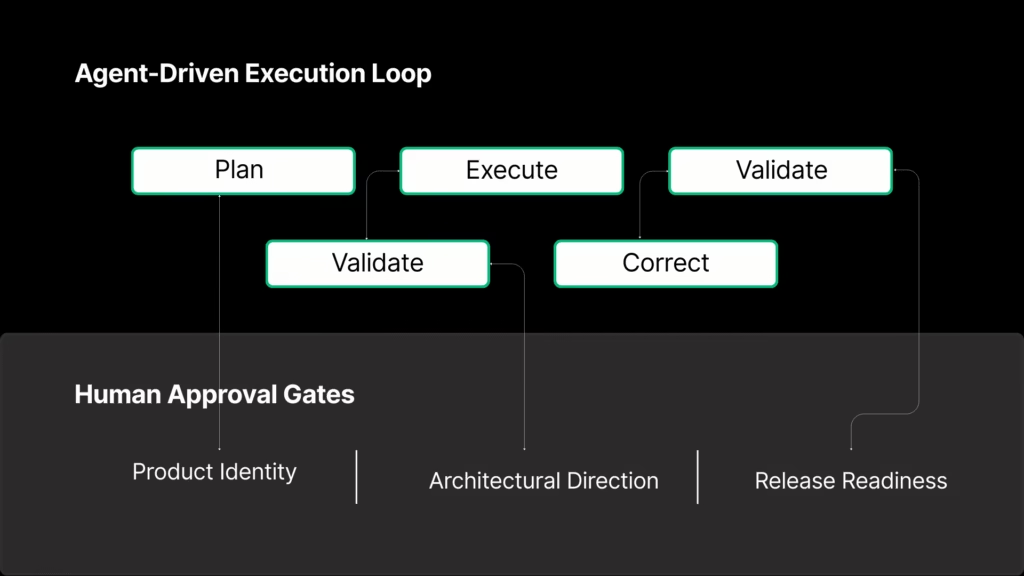

4) Ship under governance

(Governor + Human-in-the-loop)

Autonomy is bounded by accountability.

- Human approval is enforced where decisions affect product identity, architectural direction, or release readiness.

- The governing layer surfaces trade-offs explicitly and blocks progression when intent cannot be inferred safely.

- Release is treated as a control boundary, not the end of execution.

The result is execution that scales without becoming fragile, and automation that does not dilute ownership.

What The Agentic System Looks Like In Real Modernization Projects

The execution model only matters if it holds up under real constraints. The following workflows show how agentic AI for software modernization runs when the system is responsible for carrying work through to validated completion.

VB6 to .NET modernization

VB6 modernization starts by reconstructing intent, not by translating code.

- The system first reverse engineers functional and technical specifications directly from the legacy codebase. These are converted into feature-level executable specifications that define behavior, constraints, and acceptance conditions. This establishes a target state that can be validated continuously.

- A Gen AI-enabled VB6 transpiler then performs the initial transformation, bringing the application to roughly 70 percent completion. At this stage, the output compiles but is incomplete. Structural gaps, functional drift, and architectural mismatches remain.

- Agents generate a gap report that enumerates missing components, behavioral inconsistencies, and deviations from the reconstructed intent. Each gap is traceable to a specific feature or dependency. The system then iterates through those gaps, applying fixes, re-running validations, and correcting until constraints are met.

- Regression safety is enforced through autogenerated functional tests. Test coverage increases as gaps close, and builds converge toward a stable state. The workflow typically reaches approximately 90 percent completion with zero build errors, leaving only bounded refinements for human review.

VB6 is a strong candidate for agentic execution because it requires semantic understanding, feature-level reasoning, and continuous validation across tightly coupled legacy constructs.

Angular to React modernization

Angular to React modernization follows a similar pattern but operates at the UI and interaction layer.

Features are modernized incrementally, with agents preserving UX behavior and interaction contracts using MCP tools and functional tests. Work proceeds feature by feature, allowing validation to occur continuously rather than at the end.

Roughly 90 percent of the transformation is handled autonomously, with developers stepping in only for targeted refinements through the interactive terminal.

This workflow demonstrates that agentic execution is not limited to backend or legacy-heavy stacks. It applies wherever correctness depends on intent, parity, and validation rather than mechanical translation.

What The Agentic System Produces

Execution only earns trust when its outputs can be inspected. Agentic modernization produces artifacts that make intent, change, and risk visible at every stage, so teams are never asked to trust opaque behavior.

A. Specs and traceability

Modernization begins with reconstructed intent that can be verified.

- Reverse-engineered functional and technical specifications derived directly from the codebase

- Feature-level user stories and tasks that reflect actual behavior

- Explicit assumptions recorded alongside links to the source evidence they were inferred from

These artifacts establish a traceable baseline that anchors all subsequent change.

B. Behavioral safety nets

Correctness is enforced continuously, not validated at the end.

- Executable functional tests written in Gherkin that define expected behavior

- Tests generated and refined as features are modernized, not after migration is complete

Behavior becomes an executable contract rather than a static document.
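The idea of behavior as an executable contract can be illustrated with a differential parity check: run the legacy and modernized implementations on the same inputs and report every divergence. The tests described above are Gherkin scenarios; this sketch swaps in plain Python to show only the parity idea. `parity_failures` and the two discount functions are hypothetical.

```python
from typing import Any, Callable, Iterable


def parity_failures(
    legacy: Callable[..., Any],
    modern: Callable[..., Any],
    cases: Iterable[tuple],
) -> list[tuple]:
    """Return (args, legacy_result, modern_result) for every case where behavior diverges."""
    failures = []
    for args in cases:
        expected, actual = legacy(*args), modern(*args)
        if expected != actual:
            failures.append((args, expected, actual))
    return failures


# Hypothetical example; amounts are integer cents to avoid float rounding noise.
def legacy_discount(total_cents: int, is_member: bool) -> int:
    # Members get 10% off orders of 100.00 or more.
    return total_cents * 9 // 10 if is_member and total_cents >= 10_000 else total_cents


def modern_discount(total_cents: int, is_member: bool) -> int:
    # Translated version with boundary drift: ">" instead of ">=".
    return total_cents * 9 // 10 if is_member and total_cents > 10_000 else total_cents


cases = [(5_000, True), (10_000, True), (15_000, False)]
print(parity_failures(legacy_discount, modern_discount, cases))
# [((10000, True), 9000, 10000)]
```

A translation that compiles cleanly can still drift at a boundary like this one; only an executable comparison against the legacy behavior exposes it.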

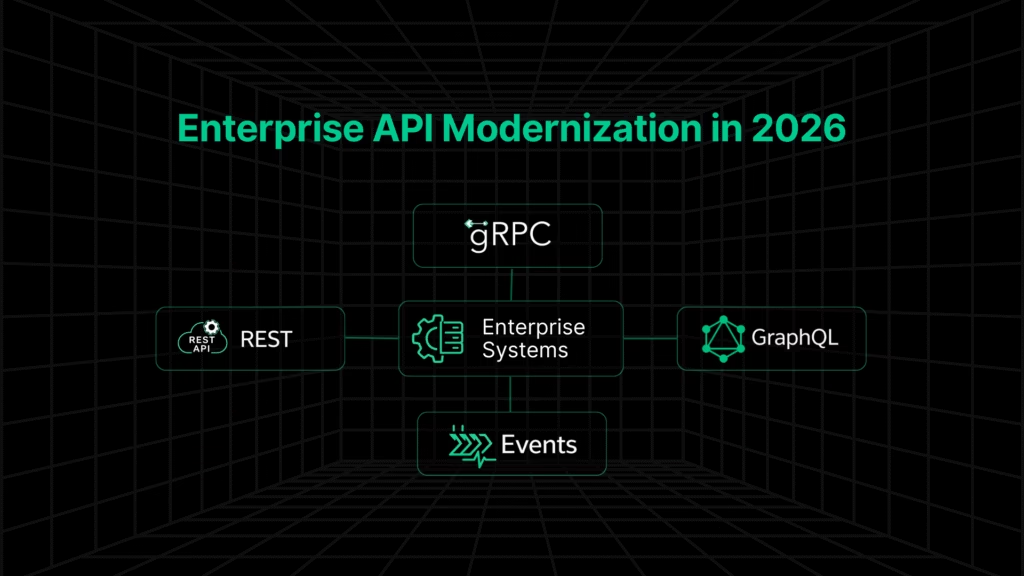

C. Architecture and change visibility

Structural impact is made explicit.

- Explainable functional and technical design artifacts with direct links to the underlying code

- API contracts expressed in their modern form

- Data flow diagrams that reflect actual runtime paths and dependencies

Architectural drift is surfaced early instead of being discovered during integration.

D. Engineering focus signals

The system highlights uncertainty instead of hiding it.

- TODO markers embedded in code where agent confidence is low or trade-offs exist

- A gap report that enumerates missing components, functional discrepancies, and technology or architecture drift

These signals direct human attention to the areas where judgment matters most.
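A gap report is most useful when every entry stays traceable to a feature and to source evidence. The sketch below shows one possible shape for such a report and for a confidence-gated TODO marker. The `Gap` fields, the three `kind` values (mirroring the gap categories listed above), the 0.7 threshold, and the `//` comment syntax of the target language are all illustrative assumptions, not a documented format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Gap:
    kind: str      # "missing_component", "functional_discrepancy", or "architecture_drift"
    feature: str   # feature the gap is traced to
    evidence: str  # pointer to source evidence, e.g. a file path
    detail: str


def gap_report(gaps: list[Gap]) -> dict[str, dict[str, list[Gap]]]:
    """Group gaps by feature, then by kind, preserving input order within each group."""
    grouped = defaultdict(lambda: defaultdict(list))
    for gap in gaps:
        grouped[gap.feature][gap.kind].append(gap)
    return {feature: dict(kinds) for feature, kinds in grouped.items()}


def todo_marker(confidence: float, reason: str, threshold: float = 0.7) -> Optional[str]:
    """Return a TODO comment when agent confidence is below the threshold, else None."""
    if confidence < threshold:
        return f"// TODO(agent, confidence={confidence:.2f}): {reason}"
    return None


# Hypothetical gaps for illustration.
gaps = [
    Gap("missing_component", "Checkout", "legacy/Checkout.frm", "No equivalent for PrintReceipt"),
    Gap("functional_discrepancy", "Checkout", "src/checkout.ts", "Tax rounding differs"),
    Gap("architecture_drift", "Reporting", "src/reports.ts", "UI calls database directly"),
]
report = gap_report(gaps)
print(sorted(report))  # ['Checkout', 'Reporting']
print(todo_marker(0.55, "verify tax rounding mode"))
# // TODO(agent, confidence=0.55): verify tax rounding mode
```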

Together, these outputs ensure modernization remains observable, auditable, and correctable. Nothing progresses on faith; everything progresses on evidence.

Where Autonomy Runs And Where Humans Must Decide

Autonomy is applied only where outcomes are bounded, observable, and reversible. When those conditions hold, agents can execute changes, validate results, and correct course without introducing systemic risk. This is how execution scales without eroding control.

Human approval is enforced where decisions carry lasting consequences. Any change that affects product identity, architectural direction, or production accountability is treated as a decision boundary and not an optimization opportunity. These boundaries are explicit and enforced through governance rather than convention.

The system applies three approval gates:

- Cross-feature UX and product coherence: Changes that alter user flows, interaction patterns, or feature semantics require human review to preserve product intent across the surface area.

- Architectural trade-offs and future direction: Decisions involving service boundaries, data ownership, dependency contracts, or long-term platform direction are surfaced for human judgment.

- Final acceptance and release readiness: Promotion to production remains a human-in-the-loop decision, informed by validation evidence, gap reports, and risk signals.

This separation keeps execution fast where it can be automated, and deliberate where it must be owned. Governance is built into the flow, not layered on after the fact.
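The split between autonomous execution and approval gates can be expressed as a simple routing rule: a change runs autonomously only if it is bounded, observable, and reversible and touches none of the three gates. The sketch below is illustrative. `ProposedChange`, `route`, and the gate keys are hypothetical, and a real system would derive these flags from the system model and validation evidence rather than accept them as inputs.

```python
from dataclasses import dataclass, field

# Hypothetical gate keys mapped to the three approval gates described above.
GATES = {
    "ux_product": "Cross-feature UX and product coherence",
    "architecture": "Architectural trade-offs and future direction",
    "release": "Final acceptance and release readiness",
}


@dataclass
class ProposedChange:
    description: str
    bounded: bool     # scoped to a known set of code units
    observable: bool  # covered by deterministic validation
    reversible: bool  # can be rolled back cleanly
    touches: set[str] = field(default_factory=set)  # gate keys this change affects


def route(change: ProposedChange) -> tuple[str, list[str]]:
    """Return ("autonomous", []) or ("needs_approval", reasons)."""
    reasons = [GATES[key] for key in sorted(change.touches.intersection(GATES))]
    for prop in ("bounded", "observable", "reversible"):
        if not getattr(change, prop):
            reasons.append(f"not {prop}")
    return ("needs_approval", reasons) if reasons else ("autonomous", [])


print(route(ProposedChange("Rename local variable", True, True, True)))
# ('autonomous', [])
print(route(ProposedChange("Split OrderService", True, True, False, {"architecture"})))
# ('needs_approval', ['Architectural trade-offs and future direction', 'not reversible'])
```

Listing every reason, rather than stopping at the first one, gives the reviewer the full picture of why a change was held back.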

What Improves vs. Manual & Static Gen AI

When execution shifts from manual orchestration or static GenAI flows to agentic systems, the improvements show up in how work is completed, how teams engage, and how risk is managed.

- Completion: Modernization reaches up to 90 percent autonomous completion. Agents carry work through translation, correction, and validation loops instead of stopping at first-pass output.

- Effort: Developer effort moves from hands-on execution to command-driven oversight. Engineers trigger workflows and review outcomes while the multi-agent system plans, applies, validates, and iterates internally.

- Quality: Observed quality improves by roughly 20–30 percent compared to static Gen AI flows. Validation is continuous, intent is enforced through executable specifications, and regressions are caught earlier.

- Flexibility: Fine-tuning remains available through an interactive terminal. Engineers can interrogate agents, adjust constraints, and intervene at specific decision points without breaking the execution loop.

These gains are not the result of better prompts. They come from shifting responsibility for execution into a governed system that can reason, validate, and correct at scale.

Why Most Agentic Approaches Fail In Modernization

Most agentic approaches break down when they are applied to real modernization work rather than controlled demos. The failure modes are consistent and predictable.

- Shallow context leads to missed functionality and drift: Agents operating with file-level or prompt-level context make locally correct changes that introduce global inconsistencies. Functional gaps and architectural drift accumulate quietly until they surface late in validation.

- Non-deterministic tooling produces fragile outputs: When execution relies on probabilistic generation without deterministic enforcement, results vary across runs. Builds pass intermittently, fixes regress earlier changes, and confidence degrades instead of compounding.

- Lack of system-level memory prevents reasoning: Agents without a persistent system model skim large codebases. They react to fragments rather than reasoning over dependencies, intent, and long-range impact.

These failures set a clear minimum bar for agentic execution in modernization.

- System context must be multi-repository and system-level, not isolated to individual files. In practice, this requires a persistent meta-cognitive model built over millions of lines of code that captures structure, dependencies, and behavior.

- Execution must be anchored to deterministic tooling. Compilers, analyzers, and test harnesses serve as enforcement points, not advisory signals.

- Architecture fidelity must be enforced, not inferred. Changes that violate structural constraints must be blocked or escalated.

- Validation must be driven by tools and evidence, not manual spot checks.
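Enforcing architecture fidelity, rather than inferring it, can be as simple as checking every dependency edge against declared layering rules and blocking the change when any edge violates them. The layer names and `ALLOWED` rules below are hypothetical, and a real enforcement point would read edges from static analysis rather than from a hand-written list.

```python
# Hypothetical layering rules: each layer may depend only on the layers listed.
ALLOWED = {
    "ui": {"application"},
    "application": {"domain"},
    "infrastructure": {"domain"},
    "domain": set(),
}


class ArchitectureViolation(Exception):
    """Raised to block a change that breaks the declared layering."""


def layering_violations(
    edges: list[tuple[str, str]], layer_of: dict[str, str]
) -> list[tuple[str, str]]:
    """Return (source, target) edges that cross layers in a disallowed direction."""
    bad = []
    for source, target in edges:
        src_layer, dst_layer = layer_of[source], layer_of[target]
        if src_layer != dst_layer and dst_layer not in ALLOWED[src_layer]:
            bad.append((source, target))
    return bad


def enforce_layering(edges: list[tuple[str, str]], layer_of: dict[str, str]) -> None:
    """Block (raise) instead of warning, so violations cannot progress silently."""
    bad = layering_violations(edges, layer_of)
    if bad:
        raise ArchitectureViolation(f"disallowed dependencies: {bad}")


layer_of = {"OrderForm": "ui", "OrderService": "application", "SqlOrderRepo": "infrastructure"}
edges = [("OrderForm", "OrderService"), ("OrderForm", "SqlOrderRepo")]
print(layering_violations(edges, layer_of))  # [('OrderForm', 'SqlOrderRepo')]
```

Raising an exception, rather than logging a warning, is the code-level equivalent of treating the check as an enforcement point instead of an advisory signal.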

The difference shows up clearly when comparing generic agent setups to systems built for modernization at scale.

| Dimension | Generic agents | Legacyleap agents |
|---|---|---|
| Code context | File-level or single-repo | Multi-repo, system-level context (~8M LoC) captured in a meta-cognitive model |
| Functional awareness | Partial and local | End-to-end across features and dependencies |
| Determinism | Low, generation-driven | High, enforced through MCP tooling |
| Architecture fidelity | Best-effort | Explicitly enforced |
| Validation | Largely manual | MCP-driven and continuous |
| Enterprise readiness | Limited | Production-grade |

Agentic AI succeeds in modernization only when these conditions are met. Anything less shifts complexity rather than removing it, and teams pay for that gap later in integration and production.

Wrapping Up

Agentic AI works in modernization when execution is grounded in complete system context, enforced through deterministic tooling, and constrained by governance. Without those conditions, autonomy amplifies risk instead of removing friction.

In this model, humans retain ownership of intent, architectural direction, and release accountability. Those decisions define the boundaries. Within them, agents take responsibility for execution tasks such as planning changes, applying them, validating outcomes, and correcting drift until constraints are satisfied or escalation is required.

This division of responsibility matters. It preserves product identity and long-term architecture while allowing modernization work to move at system speed rather than human throughput. Execution becomes consistent, repeatable, and inspectable instead of being dependent on individual effort.

The result is collaborative autonomy: humans decide what must hold, agents execute how it gets done, and governance ensures the two never drift apart.

If you want to see how this approach applies to your own system, book a demo and let us walk you through what agentic execution looks like against a real codebase.

FAQs

Agentic systems do not rely on existing documentation as a primary input. Instead, they reconstruct functional and technical intent directly from the codebase, dependency structure, and observed behavior. Documentation, when present, is treated as a secondary signal and is validated against actual system behavior rather than assumed to be correct.

Agentic execution supports incremental modernization. Because agents operate at feature and dependency boundaries, changes can be scoped, validated, and released in stages without requiring a full system rewrite. Incrementality is enforced through validation loops and governance gates rather than manual coordination.

Agentic systems integrate with existing CI/CD pipelines by using them as enforcement points rather than replacement mechanisms. Build, test, and release stages remain intact, while agent-driven execution feeds validated changes into those pipelines with traceability and evidence attached.

When system context is insufficient or signals conflict, agents surface the ambiguity explicitly rather than inferring intent. These situations are flagged through confidence markers and escalation points, allowing human reviewers to resolve intent before execution proceeds.

Scaling across portfolios depends on persistence of system context. Agentic systems reuse accumulated understanding of architectural patterns, dependency models, and validation rules across applications, reducing repeated discovery effort and allowing execution quality to improve over time rather than reset per project.

Consistency is maintained through a shared system-level context that spans repositories. A persistent meta-cognitive model captures dependencies and behavior across services, allowing agents to reason beyond local changes. Because all agents operate against the same context, cross-service changes remain aligned and regressions surface early.