When PHP or Perl Systems Are Good Candidates for Python Microservices Modernization

Not every PHP or Perl system is structurally suited for Python microservices modernization. The differentiator isn’t code quality or language age, but whether the system can support phased extraction, controlled rollout, and measurable behavioral validation.

The right candidates for PHP or Perl to Python microservices modernization share a small set of execution-level characteristics that make modernization predictable rather than speculative.

Strong candidates typically exhibit:

- Low runtime coupling: limited shared database tables, minimal global state, and no cross-request session dependence, allowing services to be isolated without reworking the entire persistence layer.

- High change frequency with contained blast radius: components that evolve often but affect a narrow set of workflows, such as integration APIs, batch jobs, schedulers, or automation logic.

- Business-critical logic with manageable operational risk: functionality that matters to revenue or compliance, yet can be introduced behind controlled routing, async boundaries, or staged rollout.

- Observable behavior: reliable logs, deterministic outputs, event trails, or historical datasets that support side-by-side comparison during parallel runs.

Poor candidates, at least initially, include:

- Deeply stateful cores with bidirectional database coupling.

- Systems where behavior is implicit, undocumented, and lacks test or telemetry signals.

- Modules whose failure modes cannot be isolated or rolled back without full-system impact.
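The screening criteria above can be made explicit as a checklist. The sketch below is a minimal illustration, assuming hypothetical signal names (`shared_tables`, `rollback_isolated`, and so on) that an assessment step would populate. It models only structural fit; change frequency and business criticality would feed a separate prioritization step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentProfile:
    """Hypothetical assessment signals for one PHP/Perl component."""
    name: str
    shared_tables: int               # tables also read or written by other modules
    uses_global_state: bool          # globals, shared sessions, cross-request state
    has_behavior_signals: bool       # logs, tests, event trails, or replayable data
    rollback_isolated: bool          # can fail or roll back without full-system impact
    bidirectional_db_coupling: bool  # reads and writes tables owned by others


def screen(c: ComponentProfile) -> str:
    """Return 'defer', 'candidate', or 'review' for early Python extraction."""
    # Disqualifiers from the "poor candidates" list take precedence.
    if c.bidirectional_db_coupling or not c.rollback_isolated or not c.has_behavior_signals:
        return "defer"
    # Low runtime coupling: no shared tables and no global or session state.
    if c.shared_tables == 0 and not c.uses_global_state:
        return "candidate"
    # Partially coupled components need a closer look at data ownership.
    return "review"
```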

Python is commonly chosen because its service frameworks, background processing ecosystem, and operational tooling align well with phased extraction, automation, and batch-oriented workloads during modernization. The choice is pragmatic and execution-driven, not a referendum on language superiority.

Deciding which PHP or Perl systems are ready to move, and which must wait, is the gating decision for Python microservices modernization. That decision depends on system-level visibility into dependencies, data flows, and runtime behavior, which is the area platforms like Legacyleap focus on before transformation risk compounds.

What to Rebuild First in Python During PHP/Perl Microservices Modernization

Once a PHP or Perl system is deemed structurally fit, the next failure point is sequencing. Many PHP monolith to Python microservices efforts stall because teams extract the wrong components first and inherit more coupling than they remove.

Effective modernization starts by targeting components that deliver architectural relief without destabilizing core behavior.

Start with components that are naturally isolatable

These elements tend to have clearer boundaries, fewer bidirectional dependencies, and measurable outputs, making them suitable for early Python service extraction.

- APIs and integration layers: External-facing APIs, partner integrations, and internal service adapters are often the cleanest entry point. They already act as contracts, can be fronted by routing layers, and allow traffic shadowing or partial cutover without touching core transaction flows.

- Background jobs, schedulers, and batch pipelines: Cron-driven workflows, ETL-style jobs, reporting pipelines, and async processors are typically less stateful and easier to validate through input/output comparison. These are high-ROI candidates for Python due to ecosystem maturity around scheduling, orchestration, and data processing.

- Automation and rule engines: Pricing rules, eligibility checks, notification logic, and workflow automation often evolve rapidly and benefit from Python’s expressiveness without requiring immediate refactoring of transactional cores.

Defer components that amplify risk early

Some parts of the system are better left untouched until service boundaries, deployment controls, and parity validation are proven.

- Core transactional logic: Order processing, billing, settlement, or ledger-style workflows often combine deep state, implicit assumptions, and tight DB coupling. Extracting these too early multiplies the blast radius.

- Heavily stateful workflows: Long-lived sessions, synchronous multi-step flows, and logic embedded directly in database transactions are poor first candidates for microservice extraction.

Low-coupling vs high-coupling signals

| Component Type | Coupling Characteristics | Early Python Candidate |
|---|---|---|
| API façade/integration service | Clear inputs/outputs, limited DB writes | High |
| Batch job/scheduled task | Deterministic runs, replayable inputs | High |
| Rules engine | Logic-centric, minimal shared state | High |
| Transactional core | Shared tables, bidirectional dependencies | Low |
| Stateful workflows | Session-heavy, implicit sequencing | Low |
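The shared-table signal in the table above can be approximated from each component's read and write sets. A minimal sketch, assuming those sets have already been extracted (for example, from the SQL each PHP/Perl module issues). `contested_writes`, `early_candidate_rating`, and the sample component names are hypothetical, and the rating looks only at data ownership, not at session state.

```python
def contested_writes(access: dict[str, dict[str, set[str]]]) -> dict[str, set[str]]:
    """Tables each component writes that some other component also reads or writes."""
    result: dict[str, set[str]] = {}
    for name, ops in access.items():
        touched_by_others: set[str] = set()
        for other, other_ops in access.items():
            if other != name:
                touched_by_others |= other_ops["reads"] | other_ops["writes"]
        result[name] = ops["writes"] & touched_by_others
    return result


def early_candidate_rating(contested: set[str]) -> str:
    """Mirror the table: no contested writes suggests High, otherwise Low."""
    return "High" if not contested else "Low"


# Hypothetical read/write sets for three legacy components.
access = {
    "order_core": {"reads": {"orders", "customers"}, "writes": {"orders", "ledger"}},
    "billing": {"reads": {"orders"}, "writes": {"ledger"}},
    "report_job": {"reads": {"orders", "ledger"}, "writes": {"report_cache"}},
}
contested = contested_writes(access)
```

With this sample data, `report_job` writes only a table no other component touches and rates High, while `order_core` and `billing` both write `ledger` and rate Low, matching the transactional-core row.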

The goal is not to “break the monolith,” but to establish safe seams where Python services can run in parallel, be validated independently, and fail without cascading impact.

This is where microservices stop being an architectural slogan and become an execution discipline. Correct sequencing depends on understanding dependency graphs, data ownership, and downstream impact, which is why teams that rely solely on repository-level analysis often misjudge what is safe to extract first.
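Downstream impact can be read off a dependency graph by counting, for each component, everything that transitively depends on it. The sketch below ranks components by that blast radius, assuming a hypothetical `deps` map in which each key lists the components it calls or reads from. It is one ordering heuristic, not a complete sequencing method; data ownership and statefulness still need separate checks.

```python
from collections import deque


def dependents_index(deps: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert 'X depends on Y' edges into 'Y is depended on by X'."""
    rev: dict[str, set[str]] = {name: set() for name in deps}
    for name, targets in deps.items():
        for target in targets:
            rev.setdefault(target, set()).add(name)
    return rev


def blast_radius(component: str, rev: dict[str, set[str]]) -> set[str]:
    """All components that transitively depend on `component` (breadth-first)."""
    seen: set[str] = set()
    queue = deque([component])
    while queue:
        current = queue.popleft()
        for dependent in rev.get(current, set()):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen


def extraction_order(deps: dict[str, set[str]]) -> list[str]:
    """Order components by ascending downstream impact, ties broken by name."""
    rev = dependents_index(deps)
    return sorted(rev, key=lambda c: (len(blast_radius(c, rev)), c))


# Hypothetical dependency map: each component lists what it depends on.
deps = {
    "partner_api": {"pricing_rules", "order_core"},
    "nightly_report": {"order_core"},
    "order_core": {"ledger"},
    "pricing_rules": set(),
    "ledger": set(),
}
```

In this example the partner API and the nightly report have no dependents and sort first, while `ledger` sorts last because every other path eventually reaches it. A dependency cycle makes a component appear in its own blast radius, which is itself a useful flag for bidirectional coupling.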

Executing Incremental Migration Safely in PHP/Perl to Python Modernization

Once components are sequenced correctly, the next constraint is how they are introduced into production without destabilizing live systems. Incremental migration patterns (most commonly strangler-style replacement and parallel run) are widely referenced, but their operational consequences are rarely spelled out.

Strangler-style replacement in real environments

In practice, strangling a PHP or Perl monolith is less about routing requests and more about owning behavior boundaries:

- Traffic routing must be explicit: which requests are served by legacy code, which by Python services, and under what conditions.

- Data ownership must be defined early. Shared tables and dual-write scenarios introduce consistency risks that compound over time.

- Synchronization strategy matters: eventual consistency may be acceptable for integrations or reporting, but not for transactional paths.

Without these guardrails, strangler patterns often devolve into long-lived hybrids that are harder to reason about than the original system.
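Explicit routing can be as simple as a declarative rule table consulted per request. A minimal sketch, assuming path-prefix rules and a stable routing key such as an account ID. `Route`, `choose_backend`, and the percentages are illustrative, and in production this logic usually lives in a gateway or proxy rather than in application code.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical rule: requests under `prefix` may be served by Python."""
    prefix: str
    python_percent: int  # 0..100, share of matching traffic sent to Python


def bucket(key: str) -> int:
    """Map a stable request key (e.g. an account ID) to a bucket in 0..99."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_backend(path: str, routing_key: str, routes: list[Route]) -> str:
    """Return 'python' or 'legacy'; unmatched paths always stay on legacy."""
    matches = [r for r in routes if path.startswith(r.prefix)]
    if not matches:
        return "legacy"
    # Longest prefix wins, so specific rules override broad ones.
    rule = max(matches, key=lambda r: len(r.prefix))
    return "python" if bucket(routing_key) < rule.python_percent else "legacy"
```

Hashing a stable key instead of choosing randomly per request keeps each account on the same backend as the percentage ramps up, so a user does not flip between PHP/Perl and Python responses mid-session. Defaulting unmatched paths to legacy keeps ownership explicit.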

Parallel run realities

Running legacy and Python services side by side is the safest way to validate change, but it introduces real operational overhead:

- Dual writes: Maintaining correctness across two execution paths requires clear authority over writes and conflict resolution.

- Traffic shadowing: Mirroring production traffic into Python services exposes edge cases early, but demands careful isolation to avoid side effects.

- Partial cutovers: Gradual shifts in traffic require monitoring not just latency and errors, but behavioral divergence across versions.

Parallel run is not a toggle; it is a sustained operating mode that needs discipline to avoid drift.
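Traffic shadowing with legacy as the single write authority can be sketched as a wrapper that always returns the legacy response and only records how the Python service differed. This assumes the Python call is side-effect free (read-only, or writing to an isolated store). `serve_with_shadow` and the divergence record format are hypothetical.

```python
import logging
from typing import Any, Callable

log = logging.getLogger("parity.shadow")

Handler = Callable[[dict[str, Any]], dict[str, Any]]


def serve_with_shadow(request: dict[str, Any], legacy: Handler, python_svc: Handler,
                      divergences: list[dict[str, Any]]) -> dict[str, Any]:
    """Serve from legacy (authoritative); call Python only to compare."""
    primary = legacy(request)
    try:
        # Assumed isolated: the shadow must not write to shared state.
        shadow = python_svc(request)
    except Exception:
        # A shadow failure is recorded but never affects the live response.
        log.exception("shadow call failed for %s", request.get("path"))
        divergences.append({"request": request, "kind": "shadow_error"})
        return primary
    if shadow != primary:
        divergences.append({"request": request, "kind": "mismatch",
                            "legacy": primary, "python": shadow})
    return primary
```

This version calls the shadow inline for clarity, which adds its latency to every request; a real deployment would more likely mirror traffic asynchronously or at the proxy layer.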

Hybrid states

The most underestimated risk in PHP/Perl to Python microservices modernization is prolonged hybridity:

- Debugging spans multiple runtimes and data models.

- Operational ownership blurs across teams.

- Temporary migration scaffolding becomes permanent.

These costs don’t appear in architecture diagrams, but they surface quickly in incident response and delivery velocity.

This phase is where many modernization efforts slow down because manual governance of routing, data flow, and parity does not scale. Managing incremental replacement safely requires consistent visibility into dependencies, runtime behavior, and downstream impact.

Validating Functional Parity Between PHP/Perl and Python Services

Functional parity is not a vague assurance that “things still work.” In PHP/Perl to Python microservices modernization, parity is a verifiable contract: the new services must produce the same externally observable behavior as the legacy system under equivalent conditions.

What functional parity means in practice

Parity must be evaluated across behavioral outcomes, not just code paths:

- Outputs: API responses, payload structure, status codes, and error semantics.

- Side effects: database writes, state transitions, cache updates, and downstream calls.

- Data mutations: record creation, updates, deletes, and derived field calculations.

- Events: messages emitted to queues, topics, or external systems.

If any of these diverge without intent, confidence in the migration erodes quickly.

What to compare during parallel execution

Effective parity validation focuses on concrete comparison points:

- API responses: payload diffs, response ordering, headers, and error behavior under identical inputs.

- Database state diffs: before/after snapshots, row-level changes, and constraint enforcement.

- Message and event streams: emitted events, payload schemas, ordering, and delivery guarantees.

The comparison must be systematic and repeatable.
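Database state diffs can be computed by snapshotting the affected rows before and after each run and reducing them to a change set. A minimal sketch, assuming both runs start from the same restored state and rows are keyed by a natural key (auto-generated IDs would otherwise differ between runtimes). The function names are hypothetical.

```python
from typing import Any

Row = dict[str, Any]


def diff_snapshots(before: dict[Any, Row], after: dict[Any, Row]) -> dict[str, Any]:
    """Row-level change set between two snapshots keyed by primary key."""
    inserted = {k: after[k] for k in after.keys() - before.keys()}
    deleted = {k: before[k] for k in before.keys() - after.keys()}
    updated: dict[Any, dict[str, tuple[Any, Any]]] = {}
    for k in before.keys() & after.keys():
        # Record (old, new) for every column whose value changed.
        changed = {col: (before[k].get(col), after[k].get(col))
                   for col in before[k].keys() | after[k].keys()
                   if before[k].get(col) != after[k].get(col)}
        if changed:
            updated[k] = changed
    return {"inserted": inserted, "deleted": deleted, "updated": updated}


def same_mutations(legacy_diff: dict[str, Any], python_diff: dict[str, Any]) -> bool:
    """Parity holds if both runs produced identical change sets from the same start state."""
    return legacy_diff == python_diff
```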

Handling real-world tolerances

Perfect byte-level equivalence is rarely realistic. Parity frameworks must account for controlled tolerances:

- Ordering: especially in async processing and batch jobs.

- Timestamps: creation times, processing times, and clock skew.

- Floating-point and encoding differences: numeric precision, locale, and string normalization.

These tolerances need to be defined explicitly; otherwise, teams end up debating false positives instead of validating behavior.
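Tolerances are easiest to govern when they are code rather than convention. The sketch below compares two JSON-like payloads and reports the paths that differ, with an explicit set of ignored keys (such as generated timestamps), keys whose lists are compared order-insensitively, a relative float tolerance via `math.isclose`, and Unicode NFC normalization for strings. The parameter names and defaults are assumptions, not a standard.

```python
import json
import math
import unicodedata
from typing import Any


def _strip(value: Any, ignore: frozenset[str]) -> Any:
    """Recursively drop ignored keys so they affect neither comparison nor sorting."""
    if isinstance(value, dict):
        return {k: _strip(v, ignore) for k, v in value.items() if k not in ignore}
    if isinstance(value, list):
        return [_strip(v, ignore) for v in value]
    return value


def _canonical(value: Any) -> str:
    """Stable sort key for order-insensitive list comparison."""
    return json.dumps(value, sort_keys=True, default=str)


def _is_number(x: Any) -> bool:
    return isinstance(x, (int, float)) and not isinstance(x, bool)


def _diff(a: Any, b: Any, path: str, unordered: frozenset[str], rel_tol: float) -> list[str]:
    if isinstance(a, dict) and isinstance(b, dict):
        out: list[str] = []
        for k in sorted(a.keys() | b.keys()):
            if k not in a or k not in b:
                out.append(f"{path}.{k}")  # field present on only one side
            else:
                out += _diff(a[k], b[k], f"{path}.{k}", unordered, rel_tol)
        return out
    if isinstance(a, list) and isinstance(b, list):
        if len(a) != len(b):
            return [path]
        if path.rsplit(".", 1)[-1] in unordered:
            a, b = sorted(a, key=_canonical), sorted(b, key=_canonical)
        out = []
        for i, (x, y) in enumerate(zip(a, b)):
            out += _diff(x, y, f"{path}[{i}]", unordered, rel_tol)
        return out
    if _is_number(a) and _is_number(b) and (isinstance(a, float) or isinstance(b, float)):
        # Tolerance applies to floats only; integers (IDs, counts) must match exactly.
        return [] if math.isclose(a, b, rel_tol=rel_tol) else [path]
    if isinstance(a, str) and isinstance(b, str):
        # Canonically equivalent Unicode (composed vs decomposed) counts as equal.
        same = unicodedata.normalize("NFC", a) == unicodedata.normalize("NFC", b)
        return [] if same else [path]
    # Types must also match, so 1 vs True is flagged.
    return [] if type(a) is type(b) and a == b else [path]


def parity_diffs(legacy: Any, candidate: Any, *,
                 ignore: frozenset[str] = frozenset(),
                 unordered: frozenset[str] = frozenset(),
                 rel_tol: float = 1e-9) -> list[str]:
    """Paths where two JSON-like payloads differ beyond the agreed tolerances."""
    return _diff(_strip(legacy, ignore), _strip(candidate, ignore), "$", unordered, rel_tol)
```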

Why manual testing breaks down

Manual test cases and spot checks don’t scale when:

- Execution paths multiply across services.

- Parallel runs persist for weeks or months.

- Edge cases only surface under production traffic.

At that point, parity validation becomes an engineering system. It requires automated test generation, behavior inference from legacy execution, and repeatable validation loops that can run continuously as traffic shifts between PHP/Perl and Python services.

This is also where modernization programs either regain momentum or stall entirely. Sustained parity assurance depends on having consistent visibility into behavior across both runtimes.
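A repeatable validation loop can start as a replay of recorded legacy executions against the Python service, scored as a pass rate. This sketch assumes cases have already been captured from logs or traffic in a hypothetical `RecordedCase` shape, and it takes the equivalence check as a parameter so the team's agreed tolerances can be plugged in.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class RecordedCase:
    """A legacy execution captured from logs or traffic (hypothetical format)."""
    case_id: str
    request: dict[str, Any]
    legacy_response: Any


@dataclass
class ParityReport:
    total: int = 0
    failures: list[str] = field(default_factory=list)

    @property
    def pass_rate(self) -> float:
        return 1.0 if self.total == 0 else (self.total - len(self.failures)) / self.total


def replay(cases: list[RecordedCase],
           candidate: Callable[[dict[str, Any]], Any],
           equivalent: Callable[[Any, Any], bool]) -> ParityReport:
    """Re-run recorded legacy inputs against the Python service and score parity."""
    report = ParityReport()
    for case in cases:
        report.total += 1
        try:
            ok = equivalent(case.legacy_response, candidate(case.request))
        except Exception:
            ok = False  # a crash on an input legacy handled counts as divergence
        if not ok:
            report.failures.append(case.case_id)
    return report
```

Because the loop is deterministic over a fixed case set, it can run on every build and again as new production traffic is captured.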

Operating Python Microservices During and After PHP/Perl Modernization

Getting Python services into production is not the finish line. It’s where modernization risk concentrates.

Many PHP/Perl to Python microservices initiatives technically succeed in code conversion but fail operationally because runtime standards, deployment controls, and rollback discipline aren’t designed for hybrid operation.

Packaging and runtime standards

During migration, Python services must be packaged in a way that supports repeatability and isolation:

- Containerized runtimes with pinned dependencies to avoid environment drift between legacy and modern paths.

- Explicit configuration externalization so behavior can be controlled without redeploying services.

- Resource limits and timeouts tuned for mixed workloads where Python services coexist with PHP/Perl execution.

These standards are about making parallel operation predictable.
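Configuration externalization can be sketched as a settings object built from environment variables at startup, so timeouts or the parity mode can change per environment without rebuilding the image. The variable names (`LEGACY_BASE_URL`, `REQUEST_TIMEOUT_S`, `MAX_WORKERS`, `PARITY_MODE`) and defaults are hypothetical.

```python
from __future__ import annotations

import os
from dataclasses import dataclass

PARITY_MODES = {"off", "shadow", "enforce"}


@dataclass(frozen=True)
class ServiceSettings:
    """Runtime settings read from the environment (names are illustrative)."""
    legacy_base_url: str
    request_timeout_s: float
    max_workers: int
    parity_mode: str

    @classmethod
    def from_env(cls, env: dict[str, str] | None = None) -> ServiceSettings:
        env = dict(os.environ) if env is None else env
        parity_mode = env.get("PARITY_MODE", "shadow")
        if parity_mode not in PARITY_MODES:
            raise ValueError(f"invalid PARITY_MODE: {parity_mode!r}")
        return cls(
            # Required: a missing value raises KeyError at startup, not at first request.
            legacy_base_url=env["LEGACY_BASE_URL"],
            request_timeout_s=float(env.get("REQUEST_TIMEOUT_S", "5")),
            max_workers=int(env.get("MAX_WORKERS", "4")),
            parity_mode=parity_mode,
        )
```

Reading settings once and validating them eagerly means a misconfigured container fails at startup instead of partway through a parallel run.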

CI/CD as a migration safety mechanism

In modernization contexts, CI/CD exists to prevent regression, not just accelerate delivery:

- Automated builds ensure that every extracted service is reproducible.

- Validation gates tie deployments to parity checks and behavioral comparisons.

- Promotion rules control when traffic can shift from legacy paths to Python services.

Without these controls, partial cutovers become irreversible experiments.
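A promotion rule tied to parity checks might look like the following, run as a pipeline step after each observation window. The rollout ladder, thresholds, and `ParityWindow` fields are illustrative assumptions; the point is that promoting, holding, and reverting are decided from the same recorded evidence every time.

```python
from dataclasses import dataclass

# Hypothetical rollout ladder: percent of traffic served by the Python service.
ROLLOUT_STEPS = [0, 1, 5, 25, 50, 100]


@dataclass(frozen=True)
class ParityWindow:
    compared_requests: int  # requests compared legacy-vs-Python in this window
    mismatches: int         # comparisons that still differed after tolerances


def next_traffic_share(current: int, window: ParityWindow,
                       min_samples: int = 1000,
                       max_mismatch_rate: float = 0.001) -> int:
    """Promote one step if parity holds, hold if evidence is thin, else revert to legacy."""
    if current not in ROLLOUT_STEPS:
        raise ValueError(f"{current} is not a rollout step")
    if window.compared_requests < min_samples:
        return current  # not enough evidence yet
    if window.mismatches / window.compared_requests > max_mismatch_rate:
        return 0  # divergence: send all traffic back to legacy
    idx = ROLLOUT_STEPS.index(current)
    return ROLLOUT_STEPS[min(idx + 1, len(ROLLOUT_STEPS) - 1)]
```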

Deployment strategies that reduce blast radius

Incremental migration demands deployment patterns that assume failure is possible and survivable:

- Canary deployments to expose Python services to real traffic without full commitment.

- Blue-green deployments to enable rapid fallback when parity deviations appear.

- Rollback readiness baked into pipelines, not treated as an afterthought.

The goal is not zero incidents, but bounded impact when issues surface.
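Canary analysis compares the Python canary against the legacy baseline over the same window and turns the result into a rollback decision. A minimal sketch with illustrative thresholds; `WindowStats` and `canary_verdict` are hypothetical. The error comparison uses an absolute difference so that a zero-error baseline does not make a single canary error look like an unbounded regression.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowStats:
    requests: int
    errors: int
    p95_latency_ms: float


def canary_verdict(baseline: WindowStats, canary: WindowStats,
                   max_error_delta: float = 0.005,
                   max_latency_ratio: float = 1.25,
                   min_requests: int = 500) -> str:
    """Return 'wait', 'rollback', or 'promote' for a Python canary vs the legacy baseline."""
    if canary.requests < min_requests or baseline.requests == 0:
        return "wait"  # too little traffic to judge
    base_err = baseline.errors / baseline.requests
    canary_err = canary.errors / canary.requests
    if canary_err - base_err > max_error_delta:
        return "rollback"
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"
```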

Security and environment isolation in hybrid states

During parallel runs, security posture often degrades if boundaries aren’t explicit:

- Network segmentation between legacy and Python services.

- Clear identity and access rules for cross-runtime calls.

- Secrets and credentials scoped per service, not shared across the hybrid stack.

Hybrid operation is temporary, but security gaps introduced during migration tend to persist.
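Identity and access rules for cross-runtime calls can be expressed as a deny-by-default allowlist of caller, target, and operation. This sketch shows only the policy logic; the service names and operations are hypothetical, and enforcement would normally sit in an API gateway, service mesh, or mutual-TLS layer rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    caller: str                 # workload identity of the calling service
    target: str                 # service being called
    operations: frozenset[str]  # operations the caller may invoke


# Hypothetical policy: every cross-runtime call path is listed explicitly.
POLICY = [
    Grant("legacy-php-web", "py-pricing", frozenset({"quote"})),
    Grant("py-reporting", "legacy-perl-batch", frozenset({"read_export"})),
]


def is_allowed(caller: str, target: str, operation: str,
               policy: list[Grant] = POLICY) -> bool:
    """Deny by default: allow only calls covered by an explicit grant."""
    return any(g.caller == caller and g.target == target and operation in g.operations
               for g in policy)
```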

Operating Python microservices safely during and after migration requires deployment artifacts, validation hooks, and environment controls that reflect modernization realities.

Where Legacyleap Fits in Python Microservices Modernization

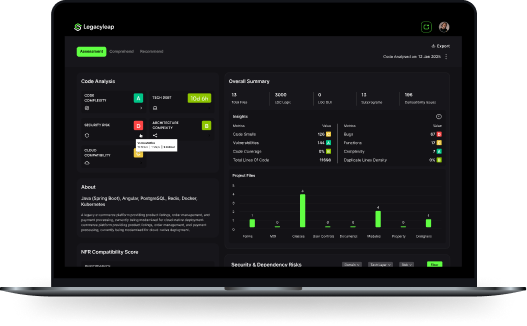

Up to this point, the discussion has focused on what needs to happen for PHP/Perl to Python microservices modernization to work safely. The remaining question is how those steps are executed consistently at enterprise scale, without turning modernization into a prolonged, manual exercise.

This is where Legacyleap fits as a system-level modernization platform that supports the full execution path.

System-level comprehension before change

Legacyleap starts with deep comprehension of existing PHP and Perl systems:

- Parses entire codebases to map dependencies, call graphs, and data flows.

- Surfaces implicit, undocumented business logic and runtime behavior.

- Builds a system-wide view that reflects how the application actually executes, not how it is organized in repositories.

This level of understanding is what enables accurate candidate selection and sequencing decisions discussed earlier.

Candidate identification and sequencing

Based on that comprehension layer, Legacyleap helps teams:

- Identify low-coupling components suitable for early Python extraction.

- Assess downstream impact before changes are introduced.

- Sequence rebuilds to reduce blast radius rather than redistribute risk.

This replaces intuition-driven sequencing with evidence tied to real dependencies and behavior.

Safe transformation and service extraction

For components selected for modernization, Legacyleap supports:

- Controlled transformation of PHP/Perl logic into Python services.

- Preservation of functional intent during extraction.

- Integration with existing delivery workflows, whether execution is handled by internal teams or external partners.

The focus is on correctness and traceability, not speed alone.

Automated parity validation

Legacyleap operationalizes functional parity by:

- Inferring expected behavior from legacy execution paths.

- Generating automated tests and comparison hooks.

- Running repeatable validation loops during parallel runs and partial cutovers.

This is what allows confidence to scale as traffic gradually shifts to Python services.

Deployment-ready outputs

Modernization is only complete when services are operable. Legacyleap generates:

- Deployment artifacts aligned with containerized, CI/CD-driven environments.

- Validation hooks that integrate with pipelines and release gates.

- Outputs designed for hybrid operation, not greenfield assumptions.

How this differs from alternatives

Legacyleap is distinct from:

- Copilots and IDE plugins, which optimize individual developer productivity but lack system-wide awareness.

- Manual SI-led migrations, which rely heavily on bespoke analysis and testing, making outcomes difficult to repeat or govern.

Instead, Legacyleap acts as a Python microservices modernization platform, applying generative AI and agentic workflows within a structured, verifiable process designed for enterprise systems.

Across assessment, sequencing, transformation, validation, and deployment, Legacyleap consolidates what is otherwise fragmented across tools, spreadsheets, and tribal knowledge, allowing modernization programs to move forward with measurable confidence rather than assumption-driven progress.

A Structured Path from Legacy PHP/Perl to Python Microservices

Modernizing PHP or Perl systems into Python microservices is an execution problem. The difference between progress and stall comes down to sequencing the right components, migrating incrementally, validating parity under real traffic, and operating safely in hybrid states.

When those controls are explicit, modernization becomes predictable. When they’re assumed, risk compounds.

The path outlined here is intentionally practical:

- Select candidates based on structure and observability, not intent.

- Rebuild isolatable components first to reduce blast radius.

- Use incremental replacement and parallel run with clear ownership.

- Treat functional parity as a verifiable contract.

- Operate Python services with deployment discipline designed for migration, not greenfield builds.

This is the execution layer where platforms like Legacyleap are applied to replace manual analysis and ad-hoc testing with system-level comprehension, automated parity validation, and deployment-ready outputs tailored for PHP/Perl to Python transitions.

If you’re evaluating Python microservices modernization for a live PHP or Perl system, the fastest way to reduce uncertainty is to see how your application behaves under these controls.

- Book a demo to walk through how your PHP/Perl codebase can be assessed, sequenced, and validated for Python service extraction.

- Request a $0 assessment focused specifically on identifying safe Python rebuild candidates, parity signals, and incremental cutover paths for your system.

The goal isn’t to move faster at all costs but to modernize with predictability, safety, and proof at every step.

FAQs

Python microservices architecture is widely adopted in large US enterprises, but success depends less on Python itself and more on how services are sequenced, validated, and operated during migration. Enterprises that treat Python as a drop-in rewrite often struggle; those that apply incremental extraction, parity validation, and controlled rollout see predictable outcomes even at scale.

The primary risks are behavioral drift, unclear data ownership, and operational complexity. Parallel systems introduce dual execution paths, which can diverge silently without continuous parity validation. Over time, hybrid states can also blur ownership boundaries and increase incident resolution time if governance is manual or inconsistent.

Transactional cores should not be migrated first when they rely on shared databases, implicit state, or tightly coupled workflows. Extracting them early increases blast radius and makes parity validation significantly harder. In most enterprise systems, transactional logic is safer to modernize only after integration layers, background jobs, and automation paths have been stabilized in Python.

Functional parity is validated by comparing externally observable behavior across legacy and Python services (API outputs, data mutations, emitted events, and side effects) under equivalent conditions. Effective validation requires automated comparisons, defined tolerances, and repeatable execution during parallel runs. Unit and integration tests alone are insufficient because they verify internal code paths, not the end-to-end behavior that downstream consumers depend on.

Unlike manual or SI-led migrations that rely on static analysis and ad-hoc testing, Legacyleap applies system-level comprehension, dependency mapping, and automated parity validation throughout the modernization lifecycle. This allows teams to sequence Python service extraction safely, validate behavior continuously during parallel runs, and generate deployment-ready outputs without relying on tribal knowledge or one-off scripts.