Introduction: Why This List Exists

Over the last several years, I’ve spent a lot of time evaluating application modernization platforms and providers in the USA, sometimes as a buyer, sometimes as a partner, often as the person responsible for outcomes.

The landscape looks crowded on the surface. Across platforms, tools, accelerators, and services, everyone claims to have cracked modernization.

What becomes obvious only after you’re deep into delivery is how fragmented that landscape really is. Most offerings are built to operate in isolation. Some are strong at understanding legacy systems. Some are good at producing new code. Others focus on migration, orchestration, or scale. Very few are designed to carry responsibility from the initial assessment of a legacy system through to a deployable, validated modern application.

After enough evaluations and real-world projects, a pattern emerged. Teams weren’t failing because they chose the “wrong” vendor. They were inheriting a model where stitching together partial solutions was considered acceptable. Over time, that assumption became harder to justify.

This list reflects how the current application modernization market actually breaks down, what different categories of providers are genuinely suited for, and where the limitations start to show when modernization is treated as a lifecycle rather than a set of disconnected tasks.

Why “Application Modernization Platforms” Are Often Confusing

Spend enough time evaluating application modernization platforms and providers, and a pattern starts to stand out. The word platform is being applied to almost everything. Tools, accelerators, frameworks, internal utilities, even delivery methodologies. On paper, it looks like abundance. In practice, it creates confusion.

Point tools that operate at a single layer are introduced as platforms. Internal accelerators, often little more than prompt orchestration and scaffolding, are positioned as enterprise-grade solutions. LLM wrappers are rebranded as modernization engines. Services methodologies are packaged, named, and sold as if the method itself were a product.

None of this is deceptive by intent. It’s a natural outcome of a market trying to move faster than its foundations allow.

The issue isn’t capability. Many of these offerings are genuinely strong at what they’re designed to do. The issue is scope. Most of what’s marketed as a platform is optimized for speed within a narrow slice of the modernization journey.

Assessment here. Code generation there. Migration somewhere else. The responsibility for stitching those pieces together conceptually, technically, and operationally still sits with the enterprise.

That distinction matters, because speed in isolated phases is not the same thing as accountability across outcomes.

Code generation does not equate to modernization. Acceleration does not imply lifecycle ownership. Services scale does not guarantee determinism.

When these distinctions get blurred, teams don’t notice immediately. The friction shows up later during validation, during rollout, and during integration with systems that were never part of the original demo. By then, the “platform” conversation has already moved on, and the cost of those gaps is absorbed quietly by engineering teams.

This is the context in which most application modernization decisions are being made today, and it’s why the category needs a clearer frame before any meaningful comparison can happen.

What an Application Modernization Platform Must Handle End-to-End

Before comparing application modernization platforms and providers, the category needs a boundary. Without one, everything collapses into positioning language with tools, accelerators, and services all discussed as if they’re interchangeable.

A modernization platform isn’t defined by how many things it claims to touch. It’s defined by what it can own, end to end, without pushing responsibility back to the enterprise at critical moments.

That ownership can be tested. And when it is, the distinction between platforms, tools, and accelerators becomes fairly unambiguous.

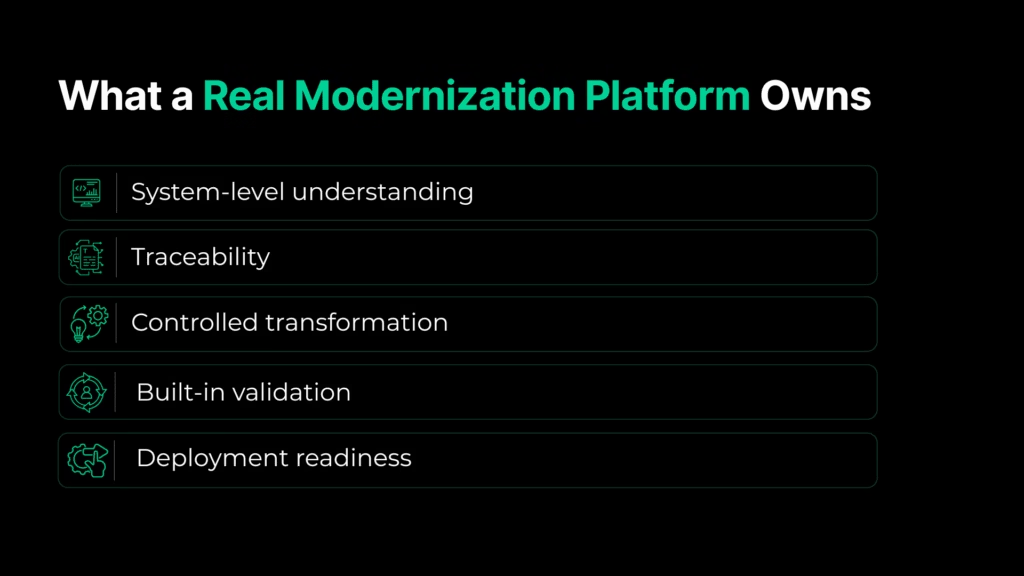

System-level understanding is non-negotiable

A real platform must be able to represent an application as a system, not as a loose collection of files or repositories. That includes dependencies, runtime behavior, data access patterns, and implicit coupling, because those are what actually make legacy systems hard to change.

If a solution only operates at the file or repo level, every downstream decision is an approximation. That may be acceptable for isolated refactoring. It is not sufficient for modernization at scale.
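To make the difference concrete, here is a minimal sketch of what a system-level question looks like once dependencies are modeled explicitly. The component names and the `DEPENDS_ON` structure are hypothetical, and a real model would also need runtime and data-access information that this toy graph leaves out.

```python
from collections import deque

# Hypothetical system model: each component maps to the components it depends on.
DEPENDS_ON = {
    "billing_ui": ["billing_service"],
    "billing_service": ["customer_db", "tax_rules"],
    "reporting_job": ["customer_db"],
    "tax_rules": [],
    "customer_db": [],
}


def impacted_by(target: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every component that directly or transitively depends on target."""
    # Invert the edges so we can walk from a component to its dependents.
    dependents: dict[str, set[str]] = {name: set() for name in depends_on}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(component)

    # Breadth-first walk over the inverted graph.
    seen: set[str] = set()
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, set()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen
```

Asking `impacted_by("customer_db", DEPENDS_ON)` surfaces the reporting job and the billing UI as well as the billing service. A file-level view of `customer_db` alone would not reveal that coupling.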

Traceability is what preserves trust

Modernization replaces behavior, not just syntax. A platform must preserve traceability from legacy behavior to modern implementation. What changed, what stayed the same, and why.

Without that lineage:

- Validation becomes subjective

- Reviews become opinion-driven

- Confidence erodes long before production

Traceability is what allows teams to reason about correctness instead of hoping for it.
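One way to picture that lineage is as a record per legacy unit, plus a check for units that have no record at all. This is only a sketch under my own assumptions: the `TraceLink` fields and the example unit names are hypothetical and do not describe any specific product's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceLink:
    legacy_unit: str   # where the behavior lived in the legacy system
    modern_unit: str   # where it lives after transformation ("" if removed)
    change: str        # "preserved", "modified", or "removed"
    rationale: str     # why the decision was made


def untraced(legacy_units: list[str], links: list[TraceLink]) -> list[str]:
    """Return legacy units with no recorded link, in their original order."""
    traced = {link.legacy_unit for link in links}
    return [unit for unit in legacy_units if unit not in traced]


# Hypothetical example data.
LEGACY_UNITS = ["CALC-TAX", "PRINT-INVOICE", "NIGHTLY-PURGE"]
LINKS = [
    TraceLink("CALC-TAX", "tax.calculate", "preserved", "same rounding rules"),
    TraceLink("PRINT-INVOICE", "", "removed", "replaced by PDF export upstream"),
]
```

Here `untraced(LEGACY_UNITS, LINKS)` returns `["NIGHTLY-PURGE"]`: a behavior that nobody has accounted for yet, found by inspection rather than in production.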

Transformation must be controlled, not improvised

Speed alone doesn’t reduce risk. Structure does. A modernization platform must support controlled, reviewable transformation steps with deterministic outcomes.

If transformation is primarily prompt-driven, with correctness inferred after the fact, the system is not under control. Human review becomes the only guardrail, and variability becomes the norm.

Validation cannot be external

Functional parity, contract alignment, and test coverage are not optional phases that can be deferred or “handled by QA later.” When validation lives outside the platform, risk is simply pushed downstream.

Platforms that accelerate transformation but externalize validation tend to look fast early and expensive late. The cost shows up during rollout, when assumptions finally collide with reality.

Deployment readiness is part of the job

Modernization that cannot reach production safely is incomplete by definition. A platform must generate outputs that are deployment-aware, environment-aware, and reversible.

That includes:

- Production-aligned artifacts

- Awareness of deployment constraints

- Rollback paths when assumptions fail

Anything less leaves the hardest part of modernization unresolved.
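Reversibility in particular is easy to state and easy to skip. A minimal sketch of the idea, assuming hypothetical `activate` and `healthy` callables supplied by whatever deployment tooling is in use:

```python
from typing import Callable


def release(
    current: str,
    candidate: str,
    activate: Callable[[str], None],
    healthy: Callable[[], bool],
) -> str:
    """Activate the candidate version; fall back to the current one if unhealthy.

    Returns the version that is live when the function finishes.
    """
    activate(candidate)
    if healthy():
        return candidate
    # Rollback path: restore the previously known-good version.
    activate(current)
    return current
```

The point is not the few lines of logic. It is that the rollback target and the health signal have to exist before cutover, which is why they belong in the platform's output rather than in a later phase.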

The gating rule

This isn’t a maturity model or a roadmap aspiration. It’s a binary filter.

If any of the above elements is missing, what you have is not a modernization platform. It is a tool, an accelerator, or a delivery aid.

All of those can be valuable. None of them should be evaluated as end-to-end platforms.

This definition is the lens used for everything that follows.
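Expressed as code, the gate is deliberately blunt. The sketch below uses hypothetical capability names of my own for the five elements described above. There is no scoring or weighting, because one missing element is enough to fail.

```python
# The five elements described above, as hypothetical flag names.
REQUIRED_CAPABILITIES = (
    "system_level_understanding",
    "traceability",
    "controlled_transformation",
    "built_in_validation",
    "deployment_readiness",
)


def gate(capabilities: set[str]) -> tuple[bool, list[str]]:
    """Return (is_platform, missing). Any missing capability fails the gate."""
    missing = [name for name in REQUIRED_CAPABILITIES if name not in capabilities]
    return (not missing, missing)
```

An offering with only `controlled_transformation` fails the gate, and the `missing` list names exactly which responsibilities stay with the enterprise.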

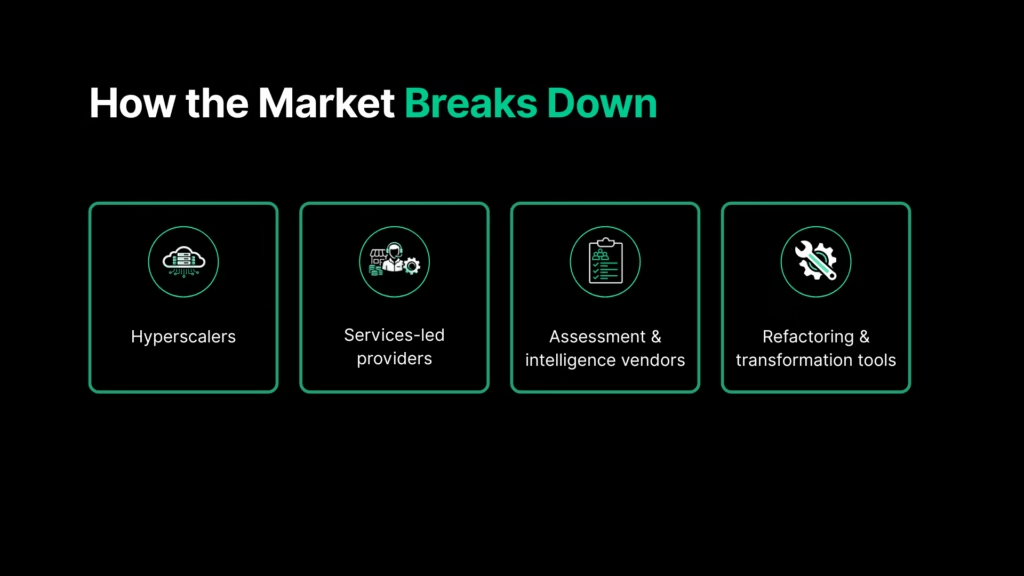

How the Application Modernization Market Actually Breaks Down

Once you stop evaluating application modernization platforms and providers based on demos and positioning, and instead apply a lifecycle lens (covered later in this piece), the market reorganizes itself very quickly. Categories that once looked competitive start to look orthogonal. Strengths become clearer. So do the gaps.

This isn’t about intent or capability. It’s about where ownership begins and ends.

1. Hyperscalers

Hyperscalers play a foundational role in most modernization programs. They enable infrastructure, runtime environments, managed services, and deployment primitives that modern systems are built on. Their tooling can assist with migration, rehosting, containerization, and operational readiness at scale.

What they do not own is the legacy system itself. They don’t take responsibility for deep system comprehension, behavioral equivalence, or transformation correctness. The assumption is that enterprises or partners will bridge that gap before workloads arrive. Hyperscalers enable modernization, but they don’t carry it end-to-end.

That distinction matters when expectations are set incorrectly.

2. Services-led providers

Large service providers bring execution capacity, delivery discipline, and domain experience. For many organizations, they are the only viable way to move large application estates forward. At scale, services matter.

The limitation is consistency. Most services-led modernization relies on internal accelerators and methodologies that live inside teams, not systems. Outcomes vary based on who’s staffed, how decisions are documented, and how rigorously validation is enforced. Accelerators help, but they are rarely portable, auditable, or repeatable outside the context they were built for.

When the method lives in people rather than platforms, results become team-dependent by design.

3. Assessment and intelligence vendors

Assessment and discovery tools bring clarity early. They inventory estates, surface dependencies, identify technical debt, and support prioritization. Used correctly, they raise the quality of modernization decisions significantly.

Where they stop is where execution begins. These tools do not own transformation, validation, or deployment. They inform decisions, but they don’t carry responsibility once those decisions are acted on. That makes them valuable inputs, but they are not modernization platforms.

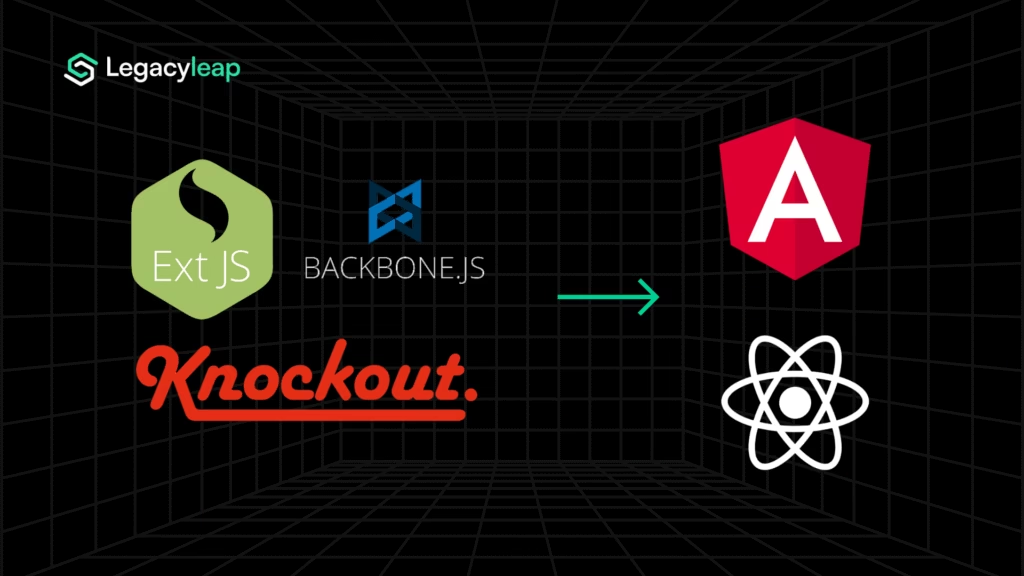

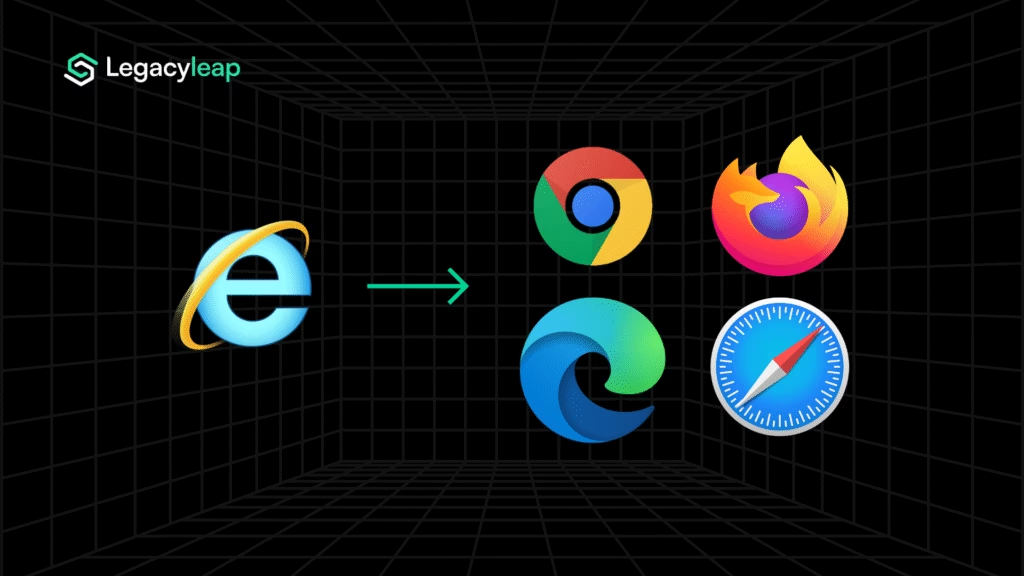

4. Refactoring and transformation tools

Refactoring, upgrade, and transformation tools are often excellent at what they’re built for. They automate specific transitions, reduce manual effort, and compress timelines for well-defined changes.

Their limitation is scope. They operate in isolation at the level of code, framework, or dependency. They don’t maintain system-wide context, behavioral traceability, or downstream validation. Used deliberately, they can accelerate parts of a modernization effort. Used as platforms, they expose teams to risks they don’t see until late in delivery.

Powerful tools don’t become platforms simply by being fast.

5. Accelerators and LLM-based platform theater

This is where the category becomes noisy.

A growing number of offerings position themselves as modernization platforms while operating primarily as LLM-driven accelerators or internal workflow wrappers. The pattern is consistent: impressive demos, rapid output, and minimal visibility into what actually changed or why.

The red flags are usually easy to spot:

- No intermediate representation of the system

- No traceability from legacy behavior to modern output

- No first-class validation artifacts

- “Human review” positioned as the primary safety mechanism

- Demos optimized for speed, not scrutiny

These solutions may accelerate activity, but they don’t reduce responsibility. When correctness, validation, and rollout are pushed back to engineering teams, the platform claim doesn’t hold up under real delivery pressure.

This reclassification isn’t meant to dismiss entire categories. Each plays the role it is purpose-built for. The problem starts when boundaries blur, and partial solutions are evaluated as if they were complete.

With that clarity in place, it becomes much easier to read any “top platforms” list and to understand what each entry can realistically be trusted to do.

A Quick Note on How to Interpret This List

Before getting into specific application modernization platforms and providers in the USA, a clarification is necessary.

This list is not an endorsement or an attempt at ranking. It reflects visibility, adoption, and relevance in real enterprise modernization conversations, and not a judgment on quality or intent.

The categories used here are not value statements. Being grouped under a particular role does not make a provider weak or incomplete by default. It simply reflects the part of the modernization problem they are structurally designed to address. Many organizations rely on multiple categories at once, often successfully.

The goal of this list is clarity, not comparison. It’s meant to make it easier to understand what each provider is realistically suited for, and what responsibility still sits with the enterprise once the engagement begins.

Top Application Modernization Platforms & Providers in the USA

Below is a grouped view of prominent application modernization platforms and providers operating in the US market today. Each entry focuses on what the provider actually delivers in practice, along with the scenarios where they tend to be the best fit.

Hyperscalers

1. Amazon Web Services (AWS)

What they do in application modernization:

AWS provides cloud infrastructure, managed services, and modernization tooling to support application migration, replatforming, and cloud-native operation. Its application modernization capabilities focus on enabling workloads to run effectively on AWS environments rather than owning legacy system comprehension, transformation logic, or validation end-to-end.

Where they typically fit in modernization programs:

Cloud migration, infrastructure enablement, and runtime operations.

Best fit when:

Modernization is driven by cloud adoption, with transformation, validation, and rollout governance handled by partners or internal teams.

2. Microsoft Azure

What they do in application modernization:

Microsoft Azure offers migration tooling, platform services, and development frameworks to move and operate applications within the Azure ecosystem. Its modernization approach emphasizes cloud readiness and platform integration, particularly for Microsoft-aligned technology stacks.

Where they typically fit in modernization programs:

Cloud migration, platform standardization, and managed application hosting.

Best fit when:

Enterprises are heavily invested in Microsoft technologies and want to modernize applications alongside Azure-native services.

3. Google Cloud Platform (GCP)

What they do in application modernization:

Google Cloud Platform focuses on container-first modernization, Kubernetes-based runtime models, and cloud-native application operations. Its offerings support replatforming and modernization patterns centered on containers and distributed systems.

Where they typically fit in modernization programs:

Containerization, Kubernetes adoption, and cloud-native runtime enablement.

Best fit when:

Modernization initiatives prioritize container-based architectures and Kubernetes-led operating models.

4. AWS Transform

What they do in application modernization:

AWS Transform is a workload-scoped modernization service designed to assist with specific transformation motions, such as VMware-based workloads and cross-platform .NET modernization for cloud environments. It operates within the AWS ecosystem and targets defined transformation use cases rather than estate-wide modernization ownership.

Where they typically fit in modernization programs:

Scoped workload transformation and cloud-aligned modernization initiatives.

Best fit when:

Organizations need targeted modernization support for specific workloads as part of a broader AWS-centric strategy.

5. Azure Copilot

What they do in application modernization:

Azure Copilot is an AI assistant embedded within the Azure platform to help teams design, manage, and operate applications and infrastructure. It supports modernization indirectly by improving how modern workloads are configured, optimized, and maintained in Azure environments.

Where they typically fit in modernization programs:

Cloud design assistance, operational optimization, and platform-level guidance.

Best fit when:

Teams are already running or migrating applications on Azure and want AI-assisted support for managing modern cloud environments.

Services-Led Modernization Providers

6. IBM Consulting

What they do in application modernization:

IBM Consulting delivers large-scale application modernization programs that combine consulting, engineering execution, and proprietary tooling. Its approach emphasizes modernization across complex enterprise estates, often alongside broader transformation initiatives involving cloud, data, and AI.

Where they typically fit in modernization programs:

End-to-end delivery execution across assessment, transformation, and rollout, supported by services teams and internal accelerators.

Best fit when:

Organizations need modernization at scale with strong governance, domain depth, and the ability to manage long-running, multi-system initiatives.

7. Cognizant

What they do in application modernization:

Cognizant provides application modernization services spanning cloud migration, refactoring, and digital engineering, supported by internal frameworks and accelerators. Modernization outcomes are driven primarily through service execution rather than platform-led automation.

Where they typically fit in modernization programs:

Large portfolio modernization efforts requiring delivery capacity, process discipline, and distributed execution teams.

Best fit when:

Enterprises require scale and consistency across multiple applications, with modernization led through service delivery models.

8. Kyndryl

What they do in application modernization:

Kyndryl focuses on modernizing and operating complex application estates in hybrid and managed infrastructure environments. Its modernization work is closely tied to operational continuity, infrastructure transformation, and long-term systems management.

Where they typically fit in modernization programs:

Modernization initiatives that must coexist with live operations and legacy infrastructure constraints.

Best fit when:

Organizations need to modernize applications without disrupting mission-critical systems or ongoing operational responsibilities.

9. EPAM Systems

What they do in application modernization:

EPAM Systems delivers application modernization through high-end software engineering and digital platform development. Its work often involves deep architectural refactoring and product-oriented modernization for software-centric enterprises.

Where they typically fit in modernization programs:

Architecture-driven modernization efforts requiring strong engineering rigor and custom solution design.

Best fit when:

Modernization involves complex refactoring and product-grade engineering rather than standardized migration approaches.

Assessment & Application Intelligence

10. CAST Software

What they do in application modernization:

CAST Software provides deep application intelligence to analyze structure, dependencies, architectural risk, and technical debt across large application estates. Its tooling is commonly used to assess modernization readiness, quantify risk, and inform transformation strategy before execution begins.

Where they typically fit in modernization programs:

Upfront system comprehension, portfolio assessment, and decision support prior to modernization execution.

Best fit when:

Enterprises need objective insight into legacy systems to guide modernization scope, sequencing, and risk management.

11. Flexera

What they do in application modernization:

Flexera offers application portfolio assessment and rationalization capabilities to support modernization and cloud planning. Its focus is on inventorying applications, understanding usage and cost, and helping organizations prioritize modernization initiatives at an estate level.

Where they typically fit in modernization programs:

Portfolio-level assessment, rationalization, and modernization planning.

Best fit when:

Modernization decisions need to be driven by estate-wide visibility, cost considerations, and prioritization rather than application-by-application analysis.

Refactoring & Transformation Tooling

12. vFunction

What they do in application modernization:

vFunction focuses on architectural analysis and automated decomposition of large monolithic applications, particularly in Java-based environments. Its tooling helps identify service boundaries and refactoring paths as a precursor to broader modernization initiatives.

Where they typically fit in modernization programs:

Architectural refactoring and decomposition during the transformation phase.

Best fit when:

Teams need help breaking down complex monoliths before or alongside broader modernization efforts.

13. VMware Tanzu

What they do in application modernization:

VMware Tanzu provides platforms and tooling for building, deploying, and operating modern applications on Kubernetes. Its focus is on standardizing runtime environments and supporting cloud-native application development and operations.

Where they typically fit in modernization programs:

Runtime modernization, platform standardization, and cloud-native application operations.

Best fit when:

Modernization efforts center on Kubernetes adoption and standardizing how applications are built and run.

14. Amazon Q Developer

What they do in application modernization:

Amazon Q Developer is an AI-assisted development tool that helps engineers understand, modify, and generate code within development environments. It accelerates implementation work but operates at the code and developer workflow level.

Where they typically fit in modernization programs:

Implementation support during refactoring and code-level transformation.

Best fit when:

Engineering teams want AI assistance to speed up code changes as part of a broader, externally governed modernization effort.

15. Kiro

What they do in application modernization:

Kiro is an agentic AI development environment designed to bring more structure and repeatability to AI-assisted coding through spec-driven workflows and task decomposition. It improves control within AI-assisted development but remains focused on implementation rather than lifecycle ownership.

Where they typically fit in modernization programs:

Structured AI-assisted development during transformation.

Best fit when:

Teams want tighter control over AI-assisted coding without replacing broader modernization governance.

Automation-Led Modernization Platforms

16. LeapLogic

What they do in application modernization:

LeapLogic provides automation-driven tooling to accelerate legacy application modernization and cloud migration. Its approach focuses on reducing manual effort during transformation through automated analysis and conversion, particularly for defined legacy stacks.

Where they typically fit in modernization programs:

Automated transformation and migration within scoped modernization initiatives.

Best fit when:

Organizations are modernizing specific legacy systems and want automation to reduce effort and timelines within a bounded scope.

This list intentionally spans tools, services, and platforms. Each plays a role. The critical distinction lies in how much of the modernization journey they can realistically own and how much responsibility remains with the enterprise once delivery begins.

How CTOs Should Evaluate Modernization Claims in Practice

Once you strip away positioning language and demos, evaluating application modernization platforms and providers becomes far more straightforward than it initially appears.

Modernization claims tend to sound impressive early. The gap only becomes visible when you ask for proof of ownership beyond the narrow slice being demonstrated. That’s where the evaluation needs to shift from “what can you show” to “what can you stand behind”.

What to ask for

A serious modernization platform or provider should be able to produce concrete artifacts beyond just outputs.

- Ask to see system-level models that represent the application as it actually exists today. Not screenshots. Not summaries. A navigable view of dependencies, flows, and structural realities.

- Ask for transformation traceability. How does a modern component map back to legacy behavior? What decisions were made automatically, what required human intervention, and where were assumptions introduced?

- Ask for validation artifacts. Test coverage, contract alignment, and behavioral checks should exist as first-class outputs, not future promises. If correctness can’t be inspected, it’s being assumed.

- Ask for parity evidence. How is functional equivalence established and measured? What happens when parity fails? Vague assurances here usually mean risk has been deferred.

- Ask for deployment readiness. Actual evidence that the output is production-aware, environment-aware, and reversible if something breaks.

None of these requests is unreasonable. They’re what modernization requires once it moves beyond experimentation.

What not to accept

Some signals indicate responsibility is being shifted back to your teams, even if it’s not stated explicitly.

- Be cautious of prompt-led demos that showcase speed without showing structure. Fast output without visibility into correctness is not progress.

- Be wary of sample repositories used as proof points. Modernizing a curated example is very different from dealing with real-world entropy.

- Treat architecture slideware for what it is – a conversation starter, not evidence of execution.

And be especially careful when gaps are explained away with, “our consultants handle that.” That phrase almost always means ownership stops at the boundary of the platform or tool.

This is the point where the conversation gets more honest. Platforms and providers that are built for full responsibility tend to welcome this level of scrutiny. Those optimized for speed or optics usually don’t.

The Modernization Lifecycle Most Vendors Don’t Own

Once you move past tools, demos, and delivery models, application modernization becomes easier to reason about. Not simpler, but clearer because it follows a lifecycle. And most of the problems enterprises run into happen when ownership drops somewhere along that path.

Full-lifecycle modernization isn’t about doing more things. It’s about carrying responsibility from the first meaningful look at a legacy system through to a modern application that can be deployed, operated, and trusted.

Each phase builds on the one before it. When any phase is weakened or skipped, the impact shows up later, often far from where the decision was originally made.

1. Comprehension

Modernization begins with understanding the system as it actually behaves. That includes dependencies, runtime flows, data access patterns, and undocumented logic that has accumulated over time.

When comprehension is shallow, transformation decisions are made without context. Hidden coupling surfaces late. Critical behavior is rediscovered in production. Teams end up modernizing symptoms instead of systems.

2. Assessment and strategy mapping

Comprehension informs strategy. This is where modernization decisions are made at the portfolio level: what to modernize, how to approach it, and in what order. These are not repo-by-repo guesses. They are tradeoffs between risk, value, and feasibility across an estate.

When assessment is treated as a one-time exercise or reduced to inventory, modernization drifts. Teams default to one-size-fits-all approaches, timelines stretch, and effort concentrates on the loudest systems rather than the most impactful ones.

3. Transformation

Transformation is where legacy systems are reshaped into modern architectures. This is not a mechanical translation. It involves architectural change, boundary definition, data model decisions, and the intentional handling of legacy constraints.

When transformation is reduced to syntax conversion or framework upgrades, legacy structure is preserved under modern tooling. The application looks new, but behaves the same way and carries the same limitations forward.

4. Validation

Validation is where modernization either earns trust or loses it. Functional parity, contract alignment, and behavioral consistency must be established deliberately, not assumed. Tests, diffs, and validation artifacts are how teams gain confidence that change hasn’t altered intent.

When validation is deferred or treated as an external concern, risk compounds quietly. Issues surface late, remediation becomes expensive, and rollout slows to a crawl because nobody is certain what they’re shipping.
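A parity check can be as simple as running the legacy and modern implementations against the same inputs and recording every difference. The sketch below is illustrative only: `legacy_fee` and `modern_fee` are hypothetical functions I made up to show how a small rounding change becomes visible evidence rather than a production surprise.

```python
from typing import Any, Callable, Iterable


def parity_report(
    legacy: Callable[[Any], Any],
    modern: Callable[[Any], Any],
    cases: Iterable[Any],
) -> list[tuple[Any, Any, Any]]:
    """Return (input, legacy_result, modern_result) for every mismatch."""
    mismatches = []
    for case in cases:
        expected = legacy(case)
        actual = modern(case)
        if expected != actual:
            mismatches.append((case, expected, actual))
    return mismatches


def legacy_fee(amount_cents: int) -> int:
    # Hypothetical legacy rule: 3% fee, truncated to whole cents.
    return amount_cents * 3 // 100


def modern_fee(amount_cents: int) -> int:
    # Hypothetical rewrite: 3% fee, rounded half up. Subtly different behavior.
    return (amount_cents * 3 + 50) // 100
```

Calling `parity_report(legacy_fee, modern_fee, [100, 150, 200])` returns `[(150, 4, 5)]`. Whether that difference is a bug or an intended change is a decision, but it is now a decision someone makes on purpose.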

5. Deployment and rollout governance

Modernization isn’t complete until it can be deployed safely. That means production-aware outputs, pipeline integration, cutover planning, and rollback readiness when assumptions break.

When deployment is treated as a separate phase owned by a different team, modernization stalls at the finish line. Systems sit “done” but unreleased, or move to production without safety nets, turning modernization into an operational gamble.

This lifecycle isn’t theoretical. It reflects where real modernization programs slow down, fracture, or fail outright. Most vendors and platforms participate in one or two phases effectively. Very few are designed to own the entire path.

That gap between participation and ownership is what shapes the rest of this conversation.


Why We Built Legacyleap

Legacyleap wasn’t built in response to a single project or a specific tool gap. It came out of a recurring pattern across modernization programs that looked very different on the surface but incomplete in similar ways underneath.

The problem wasn’t effort or intent. Teams were doing the work. Vendors were delivering what they were scoped to deliver.

What kept breaking was continuity. Comprehension lived in one phase. Decisions were made somewhere else. Transformation happened in fragments. Validation was deferred. Deployment carried a risk that nobody fully owned. Each handoff introduced uncertainty, and over time, that uncertainty compounded.

What became clear was that modernization needed fewer moving parts, not more. And ownership had to be structural, not contractual.

That realization shaped how Legacyleap was designed. Not as a collection of tools stitched together, and not as an accelerator bolted onto services, but as a platform built around a few non-negotiable principles.

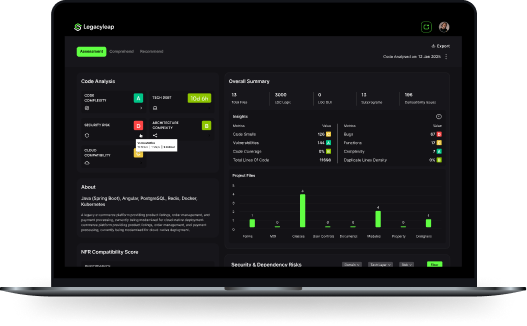

At a practical level, that means the platform is designed to carry modernization as a single, connected system, rather than a sequence of loosely coupled steps.

Legacy applications are first represented as system-level models. Transformation happens against those models in controlled, reviewable stages. Validation is generated alongside change, not layered on later, combining Gen AI and agentic AI capabilities with human-in-the-loop review by SMEs. And outputs are produced with deployment and rollback realities in mind, not left as an exercise for downstream teams.

Those choices weren’t about sophistication for its own sake. They were about eliminating the gaps where modernization programs consistently slow down, lose confidence, or stall entirely.

At a higher level, the principles are simple.

- First, modernization has to be repeatable. Outcomes shouldn’t depend on which team happens to be staffed or how familiar they are with a particular system. The same inputs should lead to consistent results.

- Second, it has to be provable. Every transformation decision should be traceable. Every change should be explainable. Validation shouldn’t rely on trust or manual effort alone.

- And finally, it has to be safe. Modernization that accelerates change without reducing risk isn’t progress. If teams can’t deploy with confidence or recover when assumptions fail, the platform hasn’t done its job.

Legacyleap exists to carry responsibility across the full modernization lifecycle because that responsibility was being fragmented everywhere else. Not to replace tools or teams, but to give modernization a spine it could reliably move along.

We’ve written in detail about what full-lifecycle modernization looks like in practice, including how comprehension, transformation, validation, and deployment are handled as a single system, if you’d like a deeper look at the technical side.

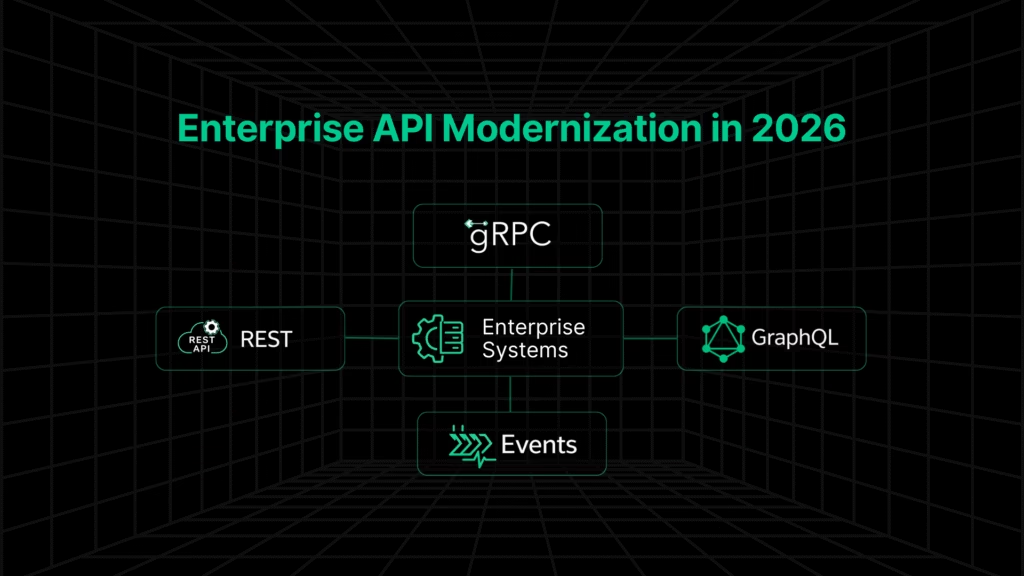

Where Application Modernization Is Headed In 2026

Application modernization is entering a more disciplined phase. The noise around tools, accelerators, and AI-led shortcuts isn’t going away, but tolerance for fragmented ownership is. As systems grow more interconnected and change carries higher stakes, speed alone stops being a convincing metric.

What starts to matter instead is lifecycle ownership. Who understands the system well enough to change it safely? Who can carry decisions forward without losing context? Who can stand behind outcomes rather than handing risk back to engineering teams once a phase is complete?

Validation will play a central role in that shift as a continuous mechanism for maintaining trust as systems evolve. The more complex modernization becomes, the less viable it is to rely on assumptions, manual review, or delayed assurance.

Over time, the category will narrow because enterprises will gravitate toward approaches that reduce coordination overhead and make responsibility explicit. Platforms and partners that can carry modernization end-to-end without collapsing under scale or complexity will naturally stand apart.

That’s the direction the market is already moving toward. Less fragmentation. Fewer seams. Clearer ownership.

Modernization doesn’t need more momentum. It needs more accountability.

If you’re evaluating modernization options today and want to sanity-check how your approach stacks up against full-lifecycle ownership, we’re happy to walk through how Legacyleap approaches it in practice.

You can book a demo to see how comprehension, transformation, validation, and deployment are handled as a single, connected system.

FAQs

Most legacy modernization platforms price by activity (per application, per LOC, or per migration batch) rather than by lifecycle ownership. The key comparison is not cost alone, but which phases are included: comprehension, transformation, validation, and deployment readiness. Platforms that price only for assessment or code generation often push risk and service costs downstream. A more reliable model aligns pricing to clearly owned modernization phases, making scope and effort predictable.

Security and compliance go beyond access controls. Enterprises should look for traceability of changes, audit-ready transformation logs, controlled AI outputs, and validation artifacts that can be reviewed independently. Platforms that rely only on “human review” without system-level traceability introduce compliance risk, especially in regulated environments.

AI in modernization is most effective for discovery, dependency analysis, and transformation assistance, not autonomous end-to-end modernization. While AI can accelerate understanding and implementation, it cannot guarantee behavioral parity or deployment safety on its own. Credible platforms use AI within governed workflows that include system models, validation, and controlled execution.

Highly customized legacy systems are where many platforms struggle. Pattern-based tools and mechanical translation break down when logic is undocumented or tightly coupled. Handling customization requires system-level comprehension, behavioral modeling, and controlled transformation, not just code conversion. This is why many modernization efforts stall without strong validation and governance.

Most vendors measure productivity through velocity metrics like faster builds or reduced development time. More meaningful indicators include lower regression risk, faster onboarding, reduced change failure rates, and greater deployment confidence. Productivity gains are only credible when tied to structural improvements, not just faster code output.

Enterprises should validate ROI claims through evidence, not demos. This includes before-and-after system models, traceability reports, parity validation results, and deployment-readiness artifacts. Vendors that cannot produce auditable outputs should be treated cautiously, regardless of projected savings.